Introduction

Turn Prompts into Protocols

ReasonKit is a structured reasoning engine that forces AI to show its work. Every angle explored. Every assumption exposed. Every decision traceable.

The Problem

Most AI responses sound helpful but miss the hard questions.

You ask: “Should I take this job offer?”

AI says: “Consider salary, benefits, and culture fit.”

What’s missing: Manager quality, team turnover, company trajectory, your leverage, opportunity cost, where people go after 2-3 years…

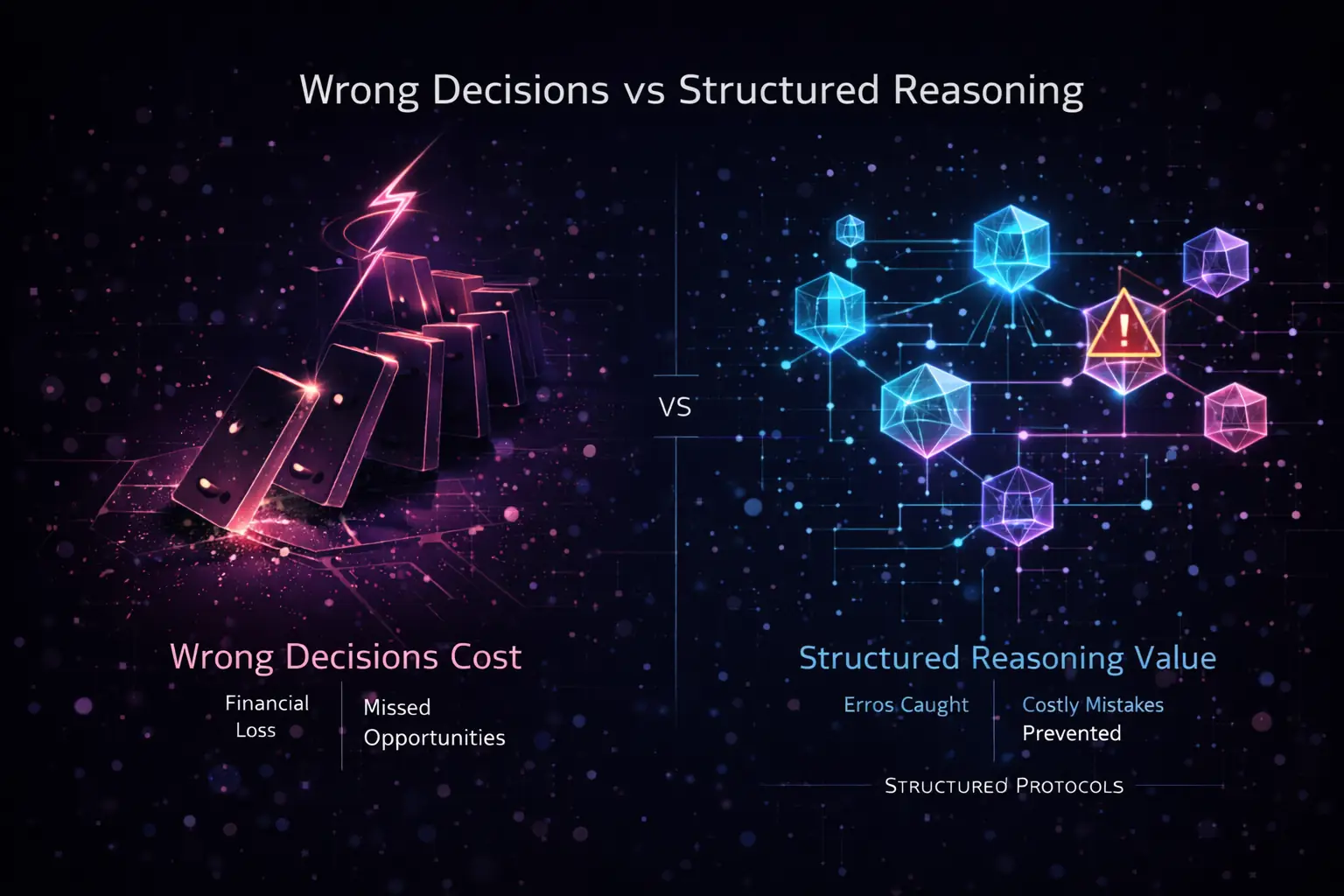

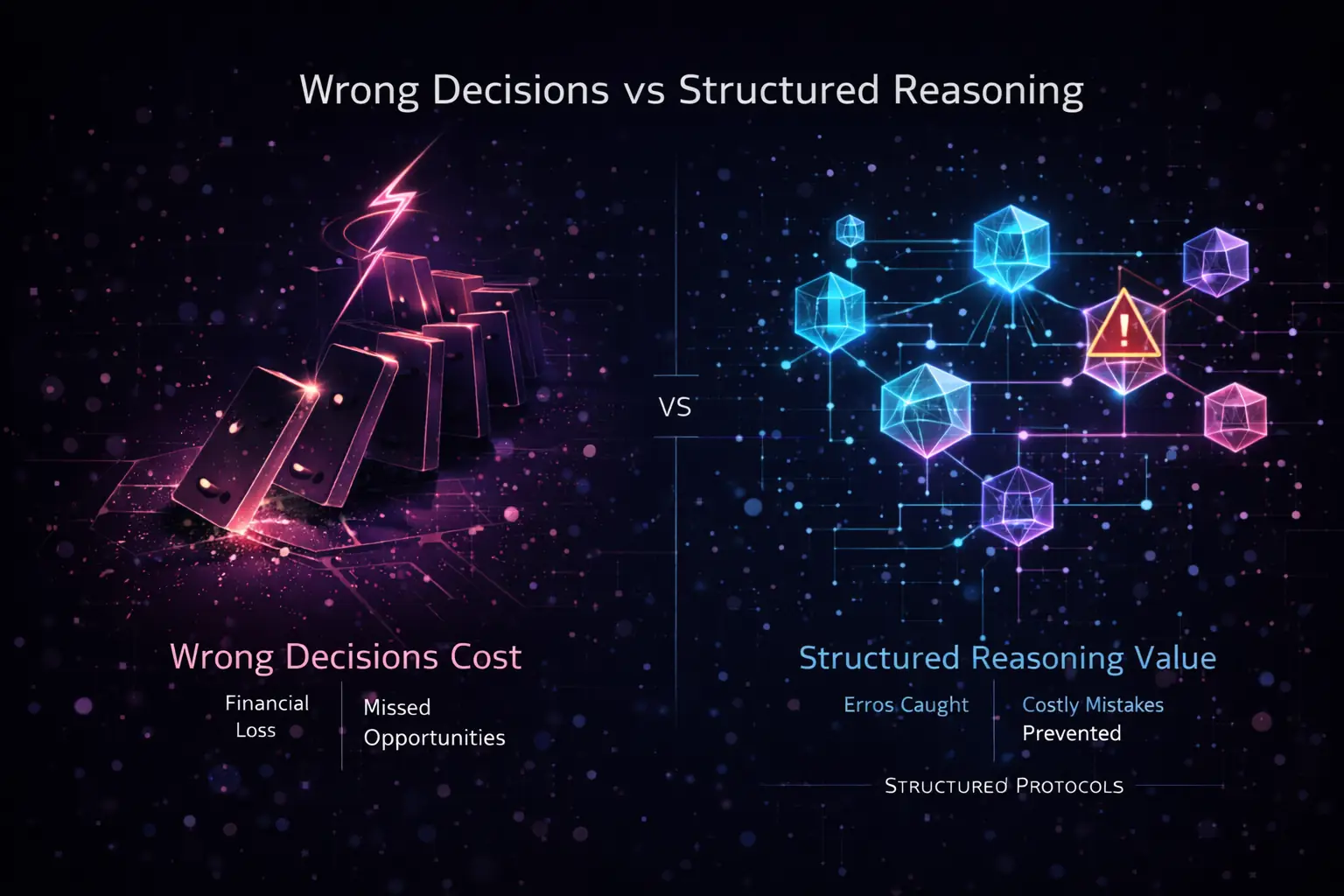

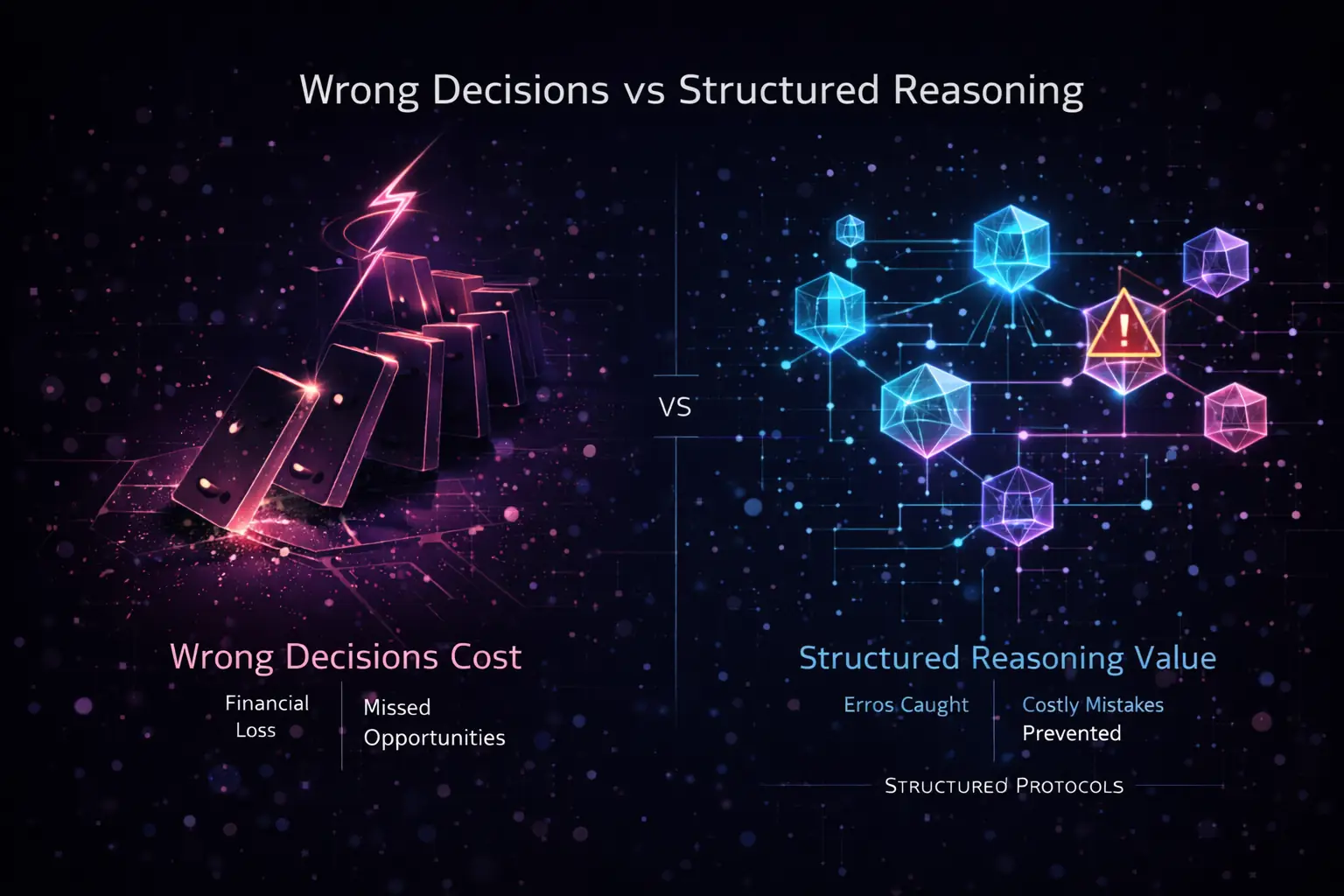

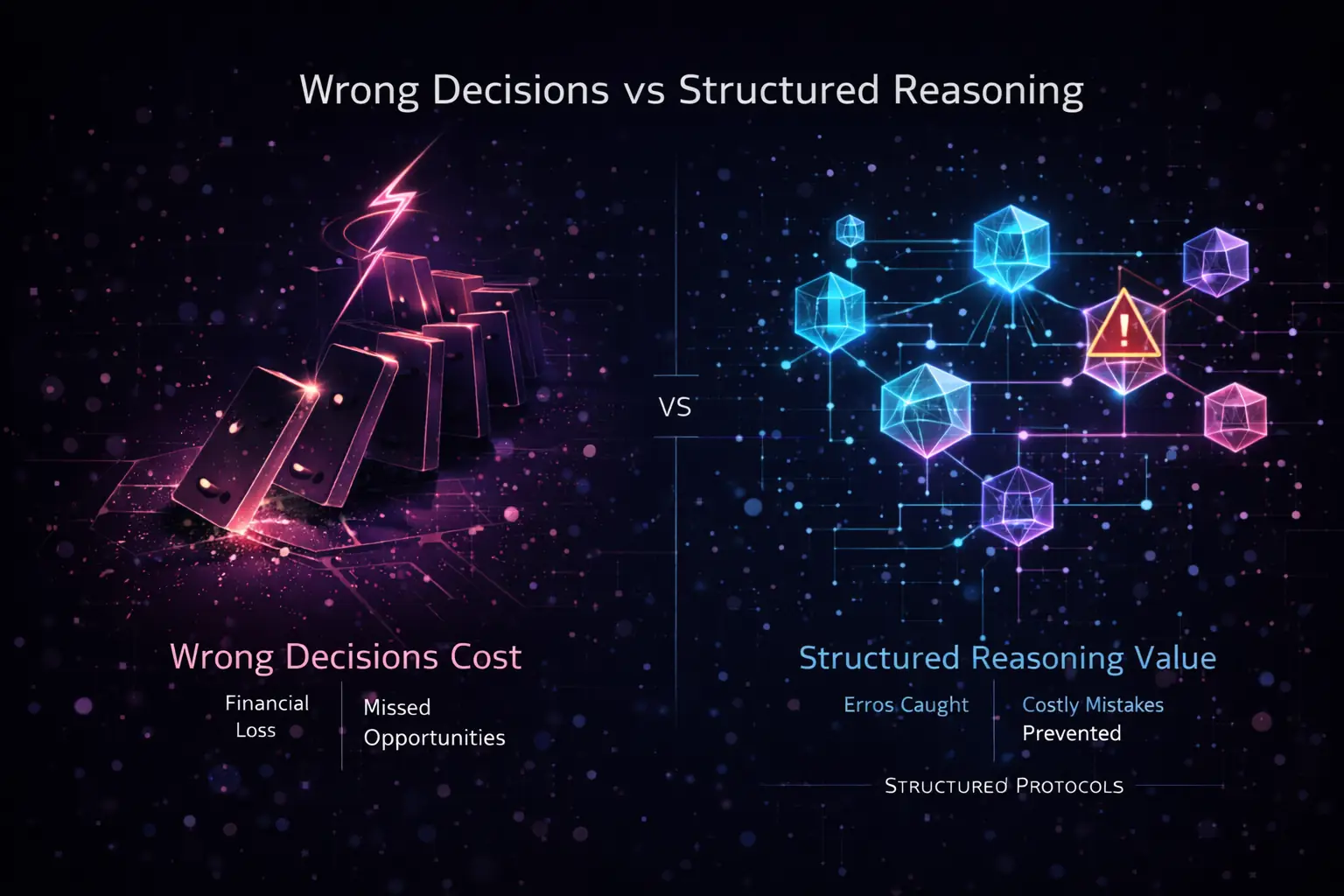

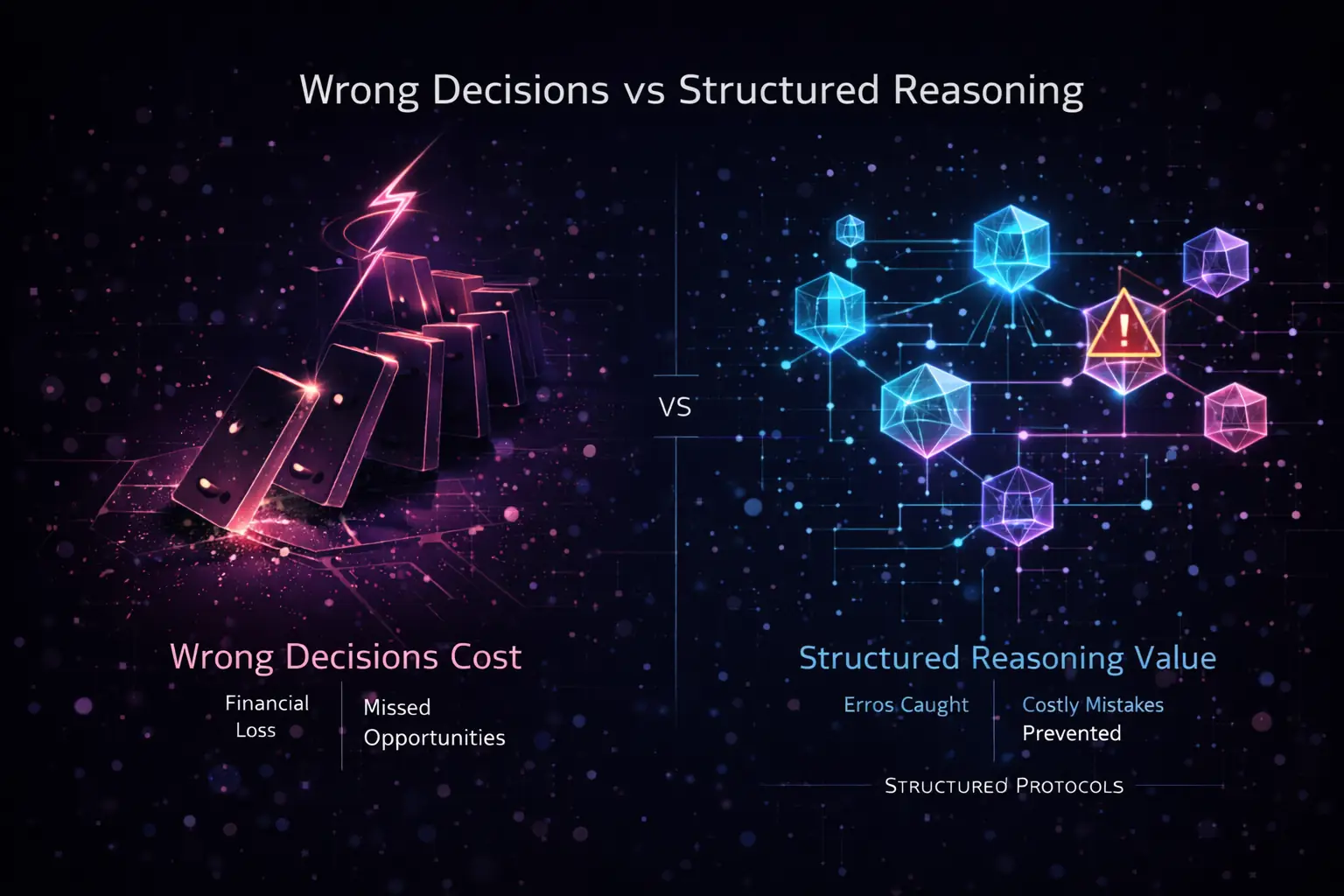

The Cost of Wrong Decisions: Without structured reasoning, unexamined assumptions can lead to financial loss and missed opportunities. Structured protocols are meant to catch errors before they compound into costly mistakes.

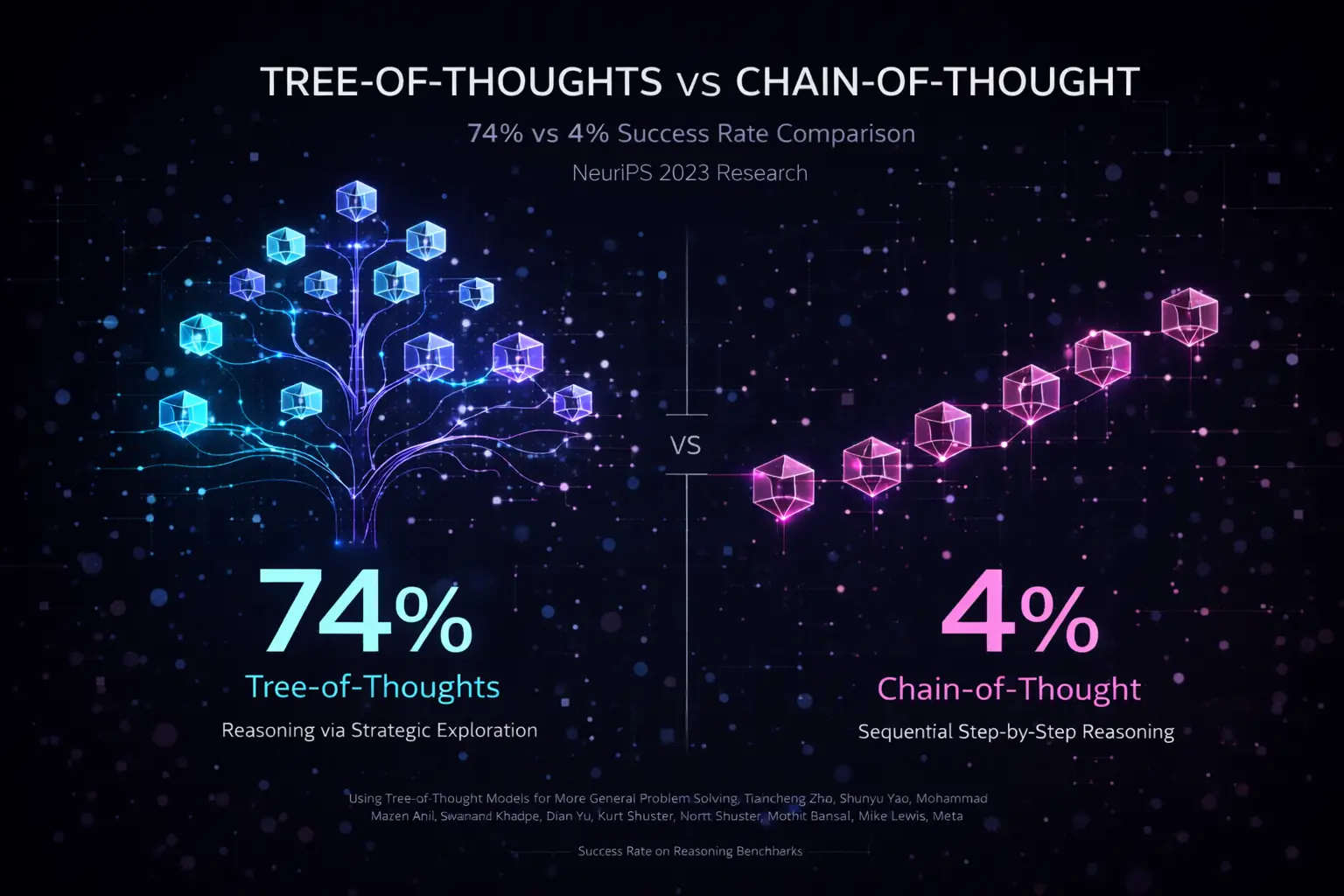

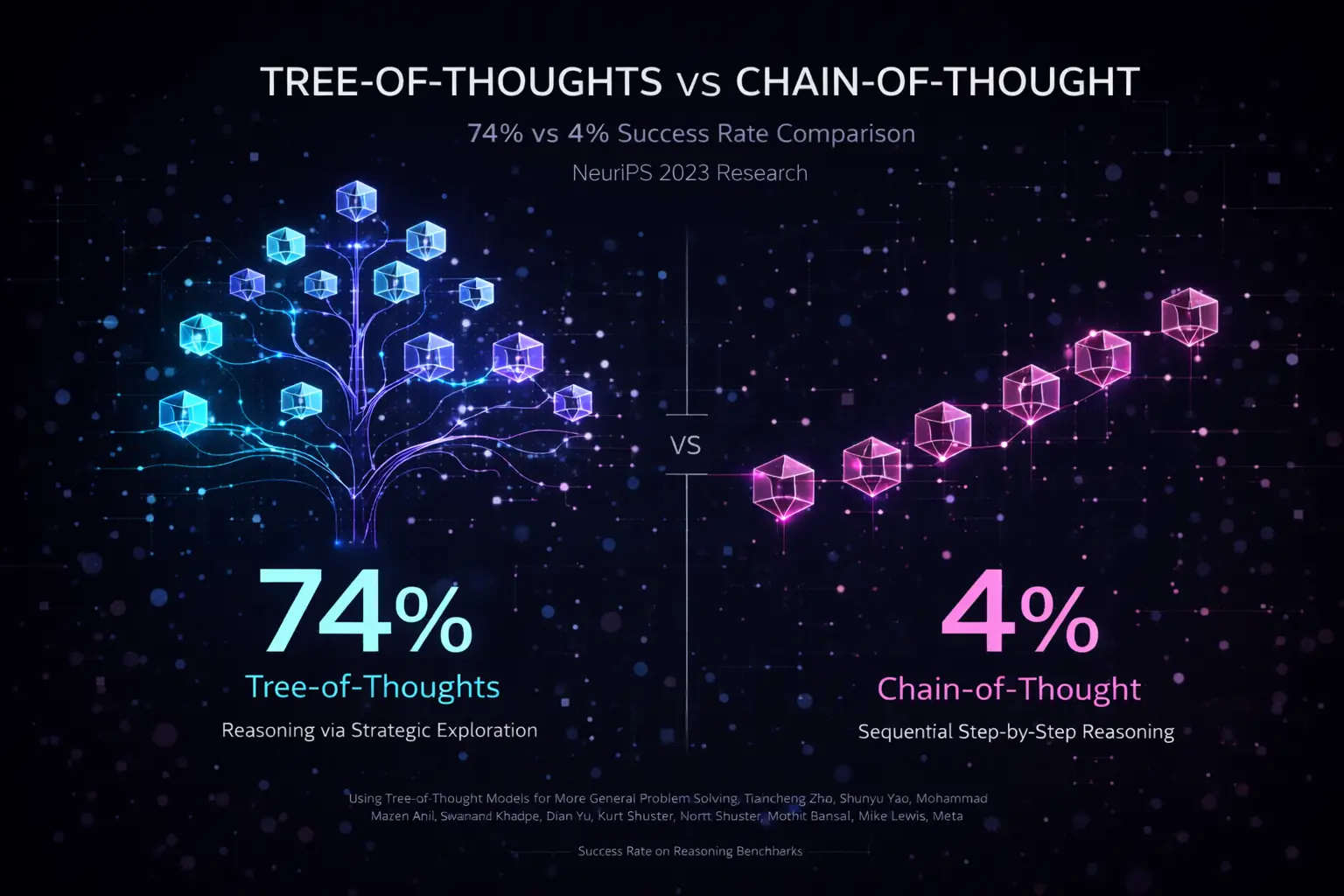

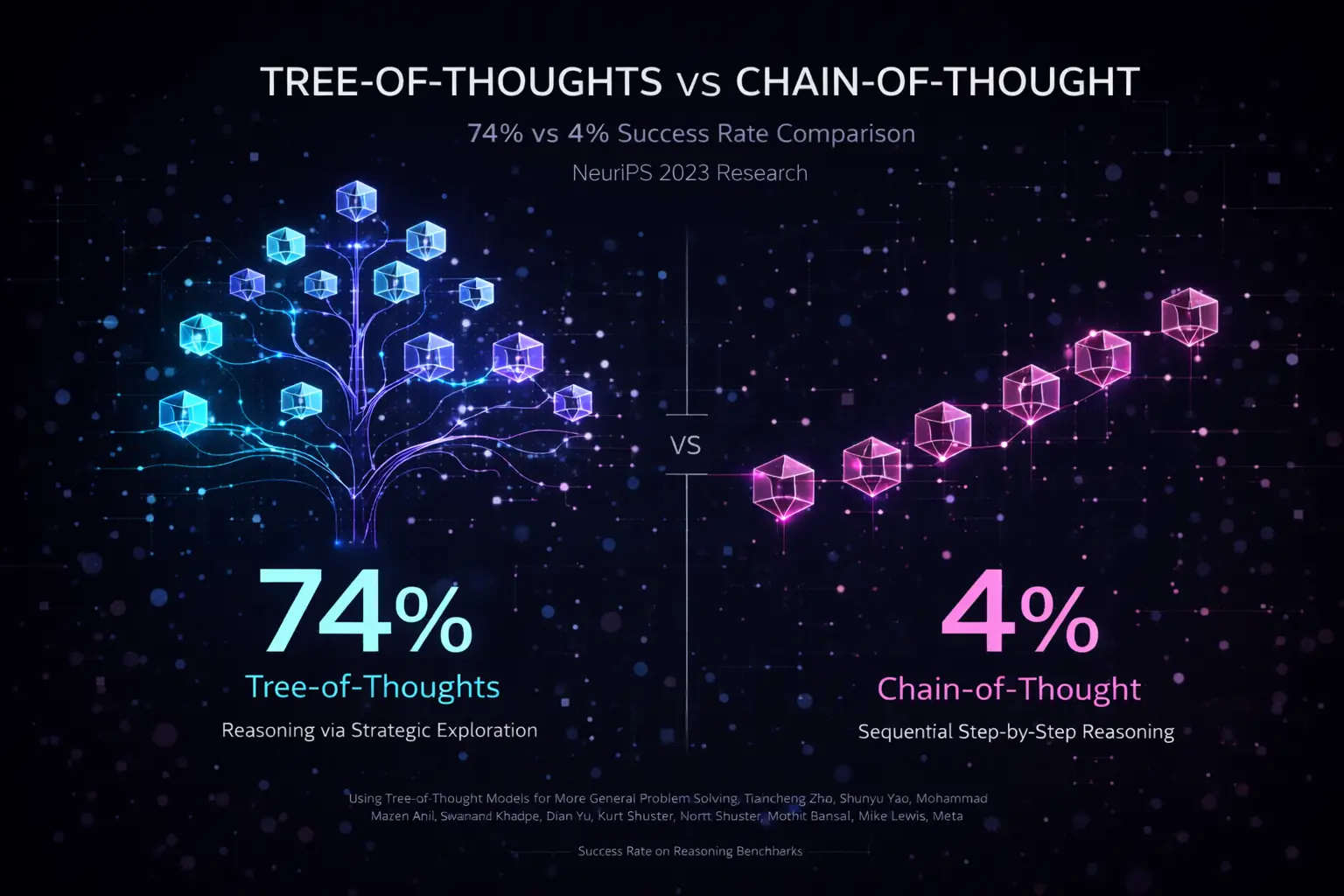

The Research: In Yao et al. (NeurIPS 2023), Tree-of-Thoughts prompting solved 74% of Game of 24 puzzles with GPT-4, versus 4% for Chain-of-Thought prompting. The gap on that task suggests why structured, multi-path exploration can outperform linear step-by-step thinking.

ReasonKit solves this by making AI reasoning structured, auditable, and reliable.

The Solution: ThinkTools

ReasonKit provides five specialized ThinkTools, each designed to catch a specific type of oversight:

| Tool | Purpose | Catches |
|---|---|---|
| GigaThink | Explore all angles | Perspectives you forgot |
| LaserLogic | Check reasoning | Flawed logic hiding in cliches |
| BedRock | Find first principles | Simple answers under complexity |
| ProofGuard | Verify claims | “Facts” that aren’t true |
| BrutalHonesty | See blind spots | The gap between plan and reality |

The 5-Step Process

Every deep analysis follows this pattern:

1. DIVERGE (GigaThink) → Explore all angles

2. CONVERGE (LaserLogic) → Check logic, find flaws

3. GROUND (BedRock) → First principles, simplify

4. VERIFY (ProofGuard) → Check facts, cite sources

5. CUT (BrutalHonesty) → Be honest about weaknesses
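The five steps above can be pictured as a small pipeline in which each stage appends its finding to a shared trace, which is what makes the result auditable. The sketch below is only an illustration of that ordering, not ReasonKit's implementation: `Step`, `placeholder`, and `run_pipeline` are hypothetical names, and the stage functions return canned strings where a real stage would call an LLM.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    phase: str    # e.g. "DIVERGE"
    tool: str     # e.g. "GigaThink"
    finding: str  # what this stage reported


# Hypothetical stage factory: each stage sees the question and the trace so far.
# A real stage would query an LLM instead of returning a canned string.
def placeholder(label: str) -> Callable[[str, List[Step]], str]:
    def stage(question: str, trace: List[Step]) -> str:
        return f"{label} for: {question} (after {len(trace)} prior steps)"
    return stage


# Phase and tool names come from the 5-step list; the order is the point.
PIPELINE = [
    ("DIVERGE", "GigaThink", placeholder("angles")),
    ("CONVERGE", "LaserLogic", placeholder("logic check")),
    ("GROUND", "BedRock", placeholder("first principles")),
    ("VERIFY", "ProofGuard", placeholder("fact check")),
    ("CUT", "BrutalHonesty", placeholder("weaknesses")),
]


def run_pipeline(question: str) -> List[Step]:
    """Run the five stages in order, keeping every intermediate result."""
    trace: List[Step] = []
    for phase, tool, stage in PIPELINE:
        trace.append(Step(phase, tool, stage(question, trace)))
    return trace


if __name__ == "__main__":
    for step in run_pipeline("Should I take this job offer?"):
        print(f"{step.phase:<9}{step.tool:<14}{step.finding}")
```

Keeping the whole trace, rather than only the final answer, is the design choice that lets a reader see what each tool contributed.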

Quick Example

# Install

cargo install reasonkit-core

# Ask a question with structured reasoning

rk think "Should I ask for a raise or look for a new job?" --profile balanced

Philosophy

Designed, Not Dreamed — Structure beats intelligence.

ReasonKit doesn’t make AI “smarter.” It makes AI show its work. The value is:

- Structured output — Not a wall of text, but organized analysis

- Auditability — See exactly what each tool caught

- Catching blind spots — Five tools for five types of oversight

Who Is This For?

Anyone Making Decisions

- Job offers, purchases, life changes

- Career pivots, relationship decisions

- Side projects and business ideas

Professionals

- Strategic planning and due diligence

- Research synthesis and fact-checking

- Risk assessment and compliance

Teams

- Architecture decisions

- Product strategy

- Investment analysis

- Hiring decisions

Next Steps

- Quick Start — Get running in 30 seconds

- ThinkTools Overview — Deep dive into each tool

- Use Cases — See real examples

Open Source

ReasonKit is open source under the Apache 2.0 license.

- Free forever: 5 core ThinkTools + PowerCombo

- Self-host: Run locally, own your data

- Extensible: Create custom ThinkTools

Learning Path: Developer

For: Software engineers, technical leads, and developers building with ReasonKit

This learning path guides you through ReasonKit from a technical implementation perspective.

🎯 Goal

Build applications that integrate ReasonKit’s structured reasoning capabilities into your software.

📚 Path Overview

Phase 1: Foundation (30 minutes)

- Quick Start - Get ReasonKit running locally

- Installation - Install via Cargo, npm, or Python

- Your First Analysis - Run your first ThinkTool

Outcome: You can execute ThinkTools from the CLI.

Phase 2: Integration (1-2 hours)

- Rust API - Use ReasonKit as a Rust library

- Python Bindings - Integrate with Python applications

- Output Formats - Parse and process results programmatically

- Integration Patterns - Common integration patterns

Outcome: You can integrate ReasonKit into your application.

Phase 3: Advanced Usage (2-3 hours)

- Architecture - Understand the system design

- Custom ThinkTools - Create your own reasoning protocols

- LLM Providers - Configure different LLM backends

- Performance Tuning - Optimize for your use case

Outcome: You can customize and optimize ReasonKit for production.

Phase 4: Production (1-2 hours)

- CLI Reference - Complete command reference

- Configuration - Production configuration

- Troubleshooting - Debug common issues

Outcome: You can deploy ReasonKit in production environments.

🛠️ Quick Reference

Common Tasks

Integrate in Rust:

use reasonkit_core::thinktool::{ProtocolExecutor, ProtocolInput};

// Call this from an async context whose return type supports `?`.
let executor = ProtocolExecutor::new()?;
let result = executor.execute("gigathink", ProtocolInput::query("Your question")).await?;

Integrate in Python:

import reasonkit

executor = reasonkit.ProtocolExecutor()

result = executor.execute("gigathink", query="Your question")

CLI Usage:

rk think "Your question" --profile balanced

📖 Related Documentation

- API Reference - Complete Rust API

- CLI Reference - Command-line interface

- Architecture - System design

- Contributing - Development setup

🎓 Next Steps

After completing this path:

- Build a custom ThinkTool for your domain

- Integrate ReasonKit into your production application

- Contribute improvements back to the project

Estimated Time: 4-7 hours

Difficulty:

Intermediate to Advanced

Prerequisites:

Familiarity with Rust or Python

Learning Path: Decision-Maker

For: Business leaders, product managers, executives, and anyone making strategic decisions

This learning path helps you use ReasonKit to make better decisions with structured reasoning.

🎯 Goal

Use ReasonKit to analyze decisions, identify blind spots, and make more informed choices.

📚 Path Overview

Phase 1: Getting Started (15 minutes)

- Introduction - Understand what ReasonKit does

- Quick Start - Run your first analysis

- Your First Analysis - See structured reasoning in action

Outcome: You understand how ReasonKit improves decision-making.

Phase 2: Understanding ThinkTools (30-45 minutes)

- ThinkTools Overview - How each tool works

- GigaThink - Explore all angles

- LaserLogic - Check logic and find flaws

- BedRock - Find first principles

- ProofGuard - Verify facts

- BrutalHonesty - Identify blind spots

Outcome: You know which ThinkTool to use for different situations.

Phase 3: Using Profiles (20 minutes)

- Understanding Profiles - When to use which profile

- Quick Profile - Fast decisions (70% confidence)

- Balanced Profile - Standard analysis (80% confidence)

- Deep Profile - Thorough analysis (85% confidence)

- Paranoid Profile - Maximum rigor (95% confidence)

Outcome: You can choose the right profile for your decision’s importance.

Phase 4: Real-World Applications (1-2 hours)

- Career Decisions - Job offers, promotions, pivots

- Financial Decisions - Investments, purchases, budgets

- Business Strategy - Strategic planning, market analysis

- Fact-Checking - Verify claims and sources

Outcome: You can apply ReasonKit to your specific decision-making needs.

Phase 5: Advanced Usage (30 minutes)

- PowerCombo - Maximum rigor for critical decisions

- Custom Profiles - Tailor profiles to your needs

- CLI Options - Fine-tune your analysis

Outcome: You can customize ReasonKit for your specific use cases.

💡 Decision Framework

When to Use Which Profile

| Decision Importance | Profile | Confidence | Time |
|---|---|---|---|
| Low (lunch choice) | Quick | 70% | 30 seconds |
| Medium (software purchase) | Balanced | 80% | 2-3 minutes |
| High (job change) | Deep | 85% | 5-10 minutes |
| Critical (major investment) | Paranoid | 95% | 15-30 minutes |
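The table above amounts to a lookup from decision importance to profile. A minimal sketch of that lookup, assuming you want to script `rk think` calls: `PROFILE_BY_IMPORTANCE` and `build_command` are hypothetical helper names, and only the `rk think ... --profile` form shown in this guide is used.

```python
import shlex

# Importance levels and profile names are taken from the table above.
PROFILE_BY_IMPORTANCE = {
    "low": "quick",
    "medium": "balanced",
    "high": "deep",
    "critical": "paranoid",
}


def build_command(question: str, importance: str) -> list[str]:
    """Return the argv for an `rk think` call at the given importance level."""
    try:
        profile = PROFILE_BY_IMPORTANCE[importance.lower()]
    except KeyError:
        raise ValueError(f"unknown importance level: {importance!r}") from None
    return ["rk", "think", question, "--profile", profile]


if __name__ == "__main__":
    argv = build_command("Should I invest in this startup?", "critical")
    # shlex.join quotes the question so the line can be pasted into a POSIX shell.
    print(shlex.join(argv))
```

Passing the list to `subprocess.run` directly, rather than a shell string, avoids shell expansion of characters such as `$` in the question.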

Common Decision Patterns

Career Decisions:

rk think "Should I take this job offer?" --profile deep

Financial Decisions:

rk think "Should I invest in this startup?" --profile paranoid

Strategic Planning:

rk think "Should we pivot to B2B?" --profile balanced

📖 Related Documentation

- Use Cases - Real-world examples

- Profiles - Choose the right profile

- CLI Reference - Command reference

- FAQ - Common questions

🎓 Next Steps

After completing this path:

- Apply ReasonKit to your next major decision

- Share structured analyses with your team

- Build decision-making workflows around ReasonKit

Estimated Time: 2-3 hours

Difficulty:

Beginner to Intermediate

Prerequisites:

None - designed for non-technical users

Learning Path: Contributor

For: Developers who want to contribute code, documentation, or improvements to ReasonKit

This learning path guides you through contributing to the ReasonKit open source project.

🎯 Goal

Make your first contribution to ReasonKit and become an active contributor.

📚 Path Overview

Phase 1: Setup (30 minutes)

- Development Setup - Get the development environment running

- Architecture Overview - Understand the codebase structure

- Code Style - Learn ReasonKit’s coding standards

Outcome: You can build and run ReasonKit from source.

Phase 2: Quality Gates (30 minutes)

- Testing - Run and write tests

- Quality Gates - Understand the 5 mandatory gates
  - Build: cargo build --release
  - Lint: cargo clippy -- -D warnings
  - Format: cargo fmt --check
  - Test: cargo test
  - Bench: cargo bench (no >5% regression)

Outcome: You can verify your changes meet quality standards.

Phase 3: First Contribution (1-2 hours)

- Contributing Guidelines - How to contribute

- Pull Request Process - Submit your first PR

- Code Review Process - What to expect

Outcome: You’ve made your first contribution.

Phase 4: Deep Dive (2-4 hours)

- Architecture - Deep understanding of system design

- Custom ThinkTools - How ThinkTools work internally

- Performance Optimization - Performance best practices

- Rust Supremacy Doctrine - Why Rust-first

Outcome: You can contribute to core functionality.

🛠️ Development Workflow

Daily Development

# Clone repository

git clone https://github.com/reasonkit/reasonkit-core

cd reasonkit-core

# Build

cargo build --release

# Run tests

cargo test

# Run quality gates

./scripts/quality_metrics.sh

# Run benchmarks

cargo bench

Making Changes

- Create a branch: git checkout -b feature/your-feature-name
- Make changes following code style guidelines
- Run quality gates:
  cargo build --release
  cargo clippy -- -D warnings
  cargo fmt --check
  cargo test
- Commit with clear message: git commit -m "feat: Add your feature description"
- Push and create PR: git push origin feature/your-feature-name

📋 Contribution Areas

Good First Issues

- Documentation improvements

- Test coverage additions

- Bug fixes in non-critical paths

- CLI UX improvements

- Example scripts

Advanced Contributions

- New ThinkTool modules

- Performance optimizations

- LLM provider integrations

- Storage backend improvements

- Protocol engine enhancements

🎯 Quality Standards

All contributions must pass:

- ✅ Build - Compiles without errors

- ✅ Lint - No clippy warnings

- ✅ Format - Code formatted with rustfmt

- ✅ Test - All tests pass

- ✅ Bench - No performance regressions

Quality Score Target: 8.0/10 minimum
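The Bench gate's "no >5% regression" rule is a relative comparison between a baseline timing and a candidate timing. How ReasonKit's tooling actually computes it is not described here, so the sketch below is an assumption about the arithmetic only; `regression_pct` and `passes_bench_gate` are hypothetical names and the timings are made up.

```python
def regression_pct(baseline_ns: float, candidate_ns: float) -> float:
    """Percentage change in runtime relative to the baseline (positive = slower)."""
    if baseline_ns <= 0:
        raise ValueError("baseline must be positive")
    return (candidate_ns - baseline_ns) / baseline_ns * 100.0


def passes_bench_gate(baseline_ns: float, candidate_ns: float,
                      limit_pct: float = 5.0) -> bool:
    """True when the candidate is at most `limit_pct` percent slower."""
    return regression_pct(baseline_ns, candidate_ns) <= limit_pct


if __name__ == "__main__":
    # Hypothetical timings in nanoseconds, not real ReasonKit benchmark numbers.
    print(passes_bench_gate(1000.0, 1040.0))  # 4% slower than baseline
    print(passes_bench_gate(1000.0, 1060.0))  # 6% slower than baseline
```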

📖 Related Documentation

- Contributing Guidelines - How to contribute

- Development Setup - Environment setup

- Code Style - Coding standards

- CONTRIBUTING.md - Complete contributor guide

🎓 Next Steps

After completing this path:

- Find an issue that matches your skills

- Make your first contribution

- Join the Discord community

- Become a maintainer

Estimated Time: 4-7 hours

Difficulty:

Intermediate to Advanced

Prerequisites:

Rust programming experience, familiarity with Git

Quick Start

Get ReasonKit running in 30 seconds.

Installation

One-Liner (Recommended)

# Linux / macOS

curl -fsSL https://get.reasonkit.sh | bash

# Windows PowerShell

irm https://get.reasonkit.sh/windows | iex

Using Cargo (Rust)

cargo install reasonkit-core

Using uv (Python)

uv pip install reasonkit

From Source

git clone https://github.com/ReasonKit/reasonkit-core.git

cd reasonkit-core

cargo build --release

Set Up Your LLM Provider

ReasonKit needs an LLM to power its reasoning. Set your API key:

# Anthropic Claude (Recommended)

export ANTHROPIC_API_KEY="your-key-here"

# Or OpenAI

export OPENAI_API_KEY="your-key-here"

# Or use OpenRouter for 300+ models

export OPENROUTER_API_KEY="your-key-here"

Your First Analysis

# Ask a simple question

rk think 'Should I buy this $200 gadget?'

# Use a specific profile (balanced is default)

rk think "Should I take this job offer?" --profile balanced

# Verify a claim with multiple sources

rk verify "The earth is flat" --sources 5

Understanding the Output

ReasonKit shows structured analysis:

╔══════════════════════════════════════════════════════════════╗

║ GIGATHINK: Exploring Perspectives ║

╠══════════════════════════════════════════════════════════════╣

│ 1. FINANCIAL: What's the total comp? 401k match? Equity? │

│ 2. CAREER: Where do people go after 2-3 years? │

│ 3. MANAGER: Your manager = 80% of job satisfaction │

│ ... │

╚══════════════════════════════════════════════════════════════╝

╔══════════════════════════════════════════════════════════════╗

║ LASERLOGIC: Checking Reasoning ║

╠══════════════════════════════════════════════════════════════╣

│ ASSUMPTION DETECTED: "Higher salary = better" │

│ HIDDEN VARIABLE: Cost of living in new location │

│ ... │

╚══════════════════════════════════════════════════════════════╝

Choosing a Profile

| Profile | Time | Best For |
|---|---|---|
| --quick | ~10 sec | Daily decisions |
| --balanced | ~20 sec | Important choices |
| --deep | ~1 min | Major decisions |
| --paranoid | ~2-3 min | High-stakes, can’t afford to be wrong |

Next Steps

- Web Sensing Guide - Give your agent eyes.

- Memory System - Enable long-term recall.

- Rust API - Build custom applications.

- Installation — Detailed installation options

- Your First Analysis — Walk through a real example

- ThinkTools Overview — Understand each tool

Installation

Get ReasonKit’s five ThinkTools for structured AI reasoning:

| Tool | Purpose | Use When |
|---|---|---|
| GigaThink | Expansive thinking, 10+ perspectives | Need creative solutions, brainstorming |
| LaserLogic | Precision reasoning, fallacy detection | Validating arguments, logical analysis |
| BedRock | First principles decomposition | Foundational decisions, axiom building |
| ProofGuard | Multi-source verification | Fact-checking, claim validation |
| BrutalHonesty | Adversarial self-critique | Reality checks, finding flaws |

Quick Install

Universal One-Liner (All Platforms)

Works on: Linux, macOS, Windows (WSL), FreeBSD

curl -fsSL https://get.reasonkit.sh | bash

The installer automatically:

- ✅ Detects your platform (Linux/macOS/Windows/WSL)

- ✅ Detects your shell (Bash/Zsh/Fish/Nu/PowerShell/Elvish)

- ✅ Chooses optimal installation path

- ✅ Configures PATH for your shell

- ✅ Installs Rust if needed

- ✅ Provides beautiful progress visualization

Windows (Native PowerShell)

irm https://get.reasonkit.sh/windows | iex

Shell-Specific Installation

The installer supports all major shells:

| Shell | Detection | PATH Setup | Completion |
|---|---|---|---|
| Bash | ✅ Auto | ✅ Auto | ✅ Available |
| Zsh | ✅ Auto | ✅ Auto | ✅ Available |
| Fish | ✅ Auto | ✅ Auto | ✅ Available |
| Nu (Nushell) | ✅ Auto | ✅ Auto | ⚠️ Manual |
| PowerShell | ✅ Auto | ✅ Auto | ⚠️ Manual |
| Elvish | ✅ Auto | ✅ Auto | ⚠️ Manual |
| tcsh/csh | ✅ Auto | ✅ Auto | ❌ None |
| ksh | ✅ Auto | ✅ Auto | ❌ None |

Prerequisites

- Git (for building from source)

- Rust 1.70+ (auto-installed if missing)

- An LLM API key (Anthropic, OpenAI, OpenRouter, or local Ollama)

Installation Methods

One-Liner (Recommended)

The installer auto-detects your OS and architecture:

# Linux/macOS

curl -fsSL https://get.reasonkit.sh | bash

# Windows PowerShell

irm https://get.reasonkit.sh/windows | iex

This will:

- Detect your platform (Linux/macOS/Windows/WSL/FreeBSD)

- Detect your shell (Bash/Zsh/Fish/Nu/PowerShell/Elvish)

- Install Rust if not present (via rustup)

- Build ReasonKit with beautiful progress visualization

- Configure PATH automatically for your shell

- Verify installation and show quick start guide

Installation paths:

- macOS: ~/bin (or Homebrew path if available)
- Linux: ~/.local/bin
- Windows (WSL): ~/.local/bin (works with Windows PATH integration)
- Windows (Native): %LOCALAPPDATA%\ReasonKit\bin

Cargo

For Rust developers:

cargo install reasonkit-core

From Source

For development or customization:

git clone https://github.com/reasonkit/reasonkit-core

cd reasonkit-core

cargo build --release

./target/release/rk --help

Verify Installation

rk --version

# reasonkit-core 0.1.5

rk --help

LLM Provider Setup

ReasonKit requires an LLM provider. Choose one:

Anthropic Claude (Recommended)

Best quality reasoning:

export ANTHROPIC_API_KEY="sk-ant-..."

OpenAI

export OPENAI_API_KEY="sk-..."

OpenRouter (300+ Models)

Access to many models through one API:

export OPENROUTER_API_KEY="sk-or-..."

# Specify a model

rk think "question" --model anthropic/claude-3-opus

Google Gemini

export GOOGLE_API_KEY="..."

Groq (Fast Inference)

export GROQ_API_KEY="..."

Local Models (Ollama)

For privacy-sensitive use cases:

ollama serve

rk think "question" --provider ollama --model llama3

Quick Test

Try each ThinkTool:

# GigaThink - Get 10+ perspectives

rk think "Should I start a business?" --tool gigathink

# LaserLogic - Check reasoning

rk think "This investment guarantees 50% returns" --tool laserlogic

# BedRock - Find first principles

rk think "What makes a good leader?" --tool bedrock

# ProofGuard - Verify claims

rk think "Coffee causes cancer" --tool proofguard

# BrutalHonesty - Reality check

rk think "My startup idea is perfect" --tool brutalhonesty

Configuration File

Create ~/.config/reasonkit/config.toml:

[default]

provider = "anthropic"

model = "claude-3-sonnet-20240229"

profile = "balanced"

[providers.anthropic]

api_key_env = "ANTHROPIC_API_KEY"

[providers.openai]

api_key_env = "OPENAI_API_KEY"

model = "gpt-4-turbo-preview"

[output]

format = "pretty"

color = true

Docker

docker run -e ANTHROPIC_API_KEY=$ANTHROPIC_API_KEY \

ghcr.io/reasonkit/reasonkit-core \

think "Should I buy a house?"

Troubleshooting

“API key not found”

Make sure your API key is exported:

echo $ANTHROPIC_API_KEY # Should print your key
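If you would rather not echo a secret to the terminal, a short script can report which provider keys are set without printing their values. This is a sketch using only the environment variable names listed in this guide; `PROVIDER_KEYS` and `configured_providers` are hypothetical names and are not part of ReasonKit.

```python
import os
from typing import List, Mapping

# Environment variable names as listed in the provider setup section.
PROVIDER_KEYS = {
    "anthropic": "ANTHROPIC_API_KEY",
    "openai": "OPENAI_API_KEY",
    "openrouter": "OPENROUTER_API_KEY",
    "google": "GOOGLE_API_KEY",
    "groq": "GROQ_API_KEY",
}


def configured_providers(env: Mapping[str, str]) -> List[str]:
    """Return providers whose key variable is set to a non-empty value."""
    return [name for name, var in PROVIDER_KEYS.items()
            if env.get(var, "").strip()]


if __name__ == "__main__":
    found = configured_providers(os.environ)
    # Print provider names only, never the key values themselves.
    print("configured:", ", ".join(found) if found else "none")
```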

“Rate limited”

Use a different provider or wait. Consider OpenRouter for high volume.

“Model not available”

Check that your provider supports the requested model:

rk models list # Show available models

Next Steps

- Your First Analysis - Run your first ThinkTool

- Configuration - Customize behavior

- ThinkTools Overview - Deep dive into each tool

Your First Analysis

Let’s walk through a complete ReasonKit analysis step by step.

The Scenario

You’ve received a job offer. It pays 20% more than your current role, but requires relocating to a new city. You’re not sure whether to accept.

Running the Analysis

rk think "I received a job offer with 20% more salary but requires relocating to a new city. Should I take it?" --profile balanced

Understanding the Output

1. GigaThink: Exploring Perspectives

GIGATHINK: 10 Perspectives

1. FINANCIAL: 20% raise minus cost of living difference

2. CAREER: Is this a career accelerator or lateral move?

3. MANAGER: Who will you report to? Track record?

4. COMPANY: Growing, stable, or declining?

5. PERSONAL: How does your partner/family feel about relocating?

6. SOCIAL: What are you leaving behind? Friends, community?

7. RISK: What if this doesn't work out? Can you return?

8. OPPORTUNITY: Is this your best option or just the one in front of you?

9. TIMING: Is now the right time in your life for a major move?

10. GUT: When you imagine accepting, do you feel excited or anxious?

What to notice: GigaThink forces you to consider angles you might have missed while focused on the salary number.

2. LaserLogic: Checking Reasoning

LASERLOGIC: Hidden Assumptions

FLAW 1: "20% more salary = better"

→ Cost of living not factored in

→ $100K in Kansas City ≠ $100K in San Francisco

FLAW 2: "More money = happier"

→ Beyond $75K, correlation with happiness flattens

→ Other factors matter more at higher incomes

FLAW 3: "This opportunity won't come again"

→ Scarcity mindset may be causing rushed decision

→ Similar opportunities likely to arise

What to notice: LaserLogic catches the mental shortcuts you might have accepted uncritically.

3. BedRock: First Principles

BEDROCK: What Actually Matters

CORE QUESTION: Is this move aligned with your 5-year goals?

THE 80/20:

• Career growth potential (not just current salary)

• Quality of life in new location

• Reversibility if it doesn't work out

SIMPLIFIED DECISION FRAMEWORK:

If the role accelerates your career AND quality of life is acceptable

→ Strong yes

If it's a lateral move OR quality of life significantly worse

→ Probably no

What to notice: BedRock cuts through complexity to the essential question.

4. ProofGuard: Verifying Claims

PROOFGUARD: Fact Check

VERIFIED: Cost of living varies dramatically by city

→ San Francisco is 80% more expensive than national average

→ Your 20% raise might be a pay cut in real terms

VERIFIED: Job hopper stigma is decreasing

→ Median tenure was 3.9 years in January 2024 (BLS)

→ Leaving after 1-2 years is increasingly common

TO VERIFY: Company's actual growth trajectory

→ Check their funding, revenue, layoff history

→ Ask for specific growth numbers in interview

What to notice: ProofGuard identifies claims worth verifying before deciding.
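The "pay cut in real terms" point is simple arithmetic: divide each salary by a cost-of-living index. The worked sketch below uses the scenario's 20% raise and the 80% figure from the sample output. The current salary of 100,000 and the assumption that the current city sits at the national average are illustrative, and `real_salary` is a hypothetical helper.

```python
def real_salary(nominal: float, cost_index: float) -> float:
    """Salary expressed in national-average purchasing power.

    cost_index is the local cost of living relative to the national average
    (1.0 = average, 1.8 = 80% more expensive).
    """
    if cost_index <= 0:
        raise ValueError("cost_index must be positive")
    return nominal / cost_index


if __name__ == "__main__":
    current = 100_000          # hypothetical current salary
    offer = current * 1.20     # the 20% raise from the scenario
    # Assumption: current city at the national average (1.0),
    # new city 80% more expensive (1.8).
    before = real_salary(current, 1.0)
    after = real_salary(offer, 1.8)
    print(f"before: {before:,.0f}  after: {after:,.0f}")
    print(f"real change: {(after / before - 1) * 100:.1f}%")
```

Under these assumptions the 120,000 offer buys what roughly 66,667 buys at the national average, about a third less than before the raise.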

5. BrutalHonesty: Uncomfortable Truths

BRUTALHONESTY: Reality Check

UNCOMFORTABLE TRUTH 1:

You're probably overweighting the salary because it's quantifiable.

The harder-to-measure factors (manager quality, work-life balance)

often matter more for happiness.

UNCOMFORTABLE TRUTH 2:

Relocating is harder than you think.

Building new social connections takes years.

Most people underestimate the loneliness of a new city.

UNCOMFORTABLE TRUTH 3:

You might be running FROM something, not TO something.

Is there something about your current situation you're avoiding?

HONEST QUESTIONS:

• If the salary were the same, would you still want this move?

• Have you talked to people who work there (not recruiters)?

• What's your plan if this doesn't work out after 1 year?

What to notice: BrutalHonesty asks the questions you’ve been avoiding.

What to Do Next

Based on this analysis, you might:

- Gather more information
  - Calculate real cost-of-living adjusted salary
  - Talk to people who work at the company
  - Visit the new city before deciding
- Ask better questions
  - Why is this role open? Growth or replacement?
  - What does the career path look like?
  - What’s the team turnover like?
- Negotiate better
  - Armed with cost-of-living data, negotiate higher
  - Ask for relocation assistance
  - Negotiate a trial period if possible
- Make a decision framework
  - What would make this an obvious yes?
  - What would make this an obvious no?
  - Set a deadline to decide

Tips for Future Analyses

- Be specific: “Job offer” is better than “career question”
- Include context: Mention key constraints (timeline, family, etc.)
- Use appropriate profile: Major decisions deserve --deep or --paranoid
- Focus on BrutalHonesty: It’s usually the most valuable section
- Action the insights: Analysis is only useful if it changes behavior

Next Steps

- ThinkTools Overview — Deep dive into each tool

- Profiles — Choose your analysis depth

- Use Cases — More decision examples

Configuration

ReasonKit can be configured via config file, environment variables, or CLI flags.

Configuration File

Create ~/.config/reasonkit/config.toml:

# Default settings

[default]

provider = "anthropic"

model = "claude-sonnet-4-20250514"

profile = "balanced"

output_format = "pretty"

# LLM Providers

[providers.anthropic]

api_key_env = "ANTHROPIC_API_KEY"

model = "claude-sonnet-4-20250514"

max_tokens = 8192

[providers.openai]

api_key_env = "OPENAI_API_KEY"

model = "gpt-4o"

max_tokens = 8192

[providers.openrouter]

api_key_env = "OPENROUTER_API_KEY"

default_model = "anthropic/claude-sonnet-4"

[providers.ollama]

base_url = "http://localhost:11434"

model = "llama3"

# Output settings

[output]

format = "pretty" # pretty, json, markdown

color = true

show_timing = true

show_tokens = false

# ThinkTool configurations

[thinktools.gigathink]

min_perspectives = 10

include_contrarian = true

[thinktools.laserlogic]

fallacy_detection = true

assumption_analysis = true

show_math = true

[thinktools.bedrock]

decomposition_depth = 3

show_80_20 = true

[thinktools.proofguard]

min_sources = 3

require_citation = true

source_tier_threshold = 3

[thinktools.brutalhonesty]

severity = "high"

include_alternatives = true

# Profile customization

[profiles.custom_quick]

tools = ["gigathink", "laserlogic"]

gigathink_perspectives = 5

timeout = 30

[profiles.custom_thorough]

tools = ["gigathink", "laserlogic", "bedrock", "proofguard", "brutalhonesty"]

gigathink_perspectives = 15

laserlogic_depth = "deep"

proofguard_sources = 5

timeout = 600

Environment Variables

# Required: Your LLM provider API key

export ANTHROPIC_API_KEY="sk-ant-..."

export OPENAI_API_KEY="sk-..."

export OPENROUTER_API_KEY="sk-or-..."

export GOOGLE_API_KEY="..."

export GROQ_API_KEY="gsk_..."

# Optional: Defaults

export RK_PROVIDER="anthropic"

export RK_MODEL="claude-sonnet-4-20250514"

export RK_PROFILE="balanced"

export RK_OUTPUT_FORMAT="pretty"

# Optional: Logging

export RK_LOG_LEVEL="info" # debug, info, warn, error

export RK_LOG_FILE="$HOME/.local/share/reasonkit/logs/rk.log"

CLI Flags

CLI flags override config file and environment variables:

# Provider and model

rk think "question" --provider anthropic --model claude-3-opus-20240229

# Profile

rk think "question" --profile deep

# Output format

rk think "question" --format json

# Specific tool settings

rk think "question" --min-perspectives 15 --min-sources 5

# Timeout

rk think "question" --timeout 300

# Verbosity

rk think "question" --verbose

rk think "question" --quiet

Configuration Precedence

- CLI flags (highest priority)

- Environment variables

- Config file

- Built-in defaults (lowest priority)
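The precedence order above can be read as a first-match lookup across four sources. The sketch below illustrates that rule only and is not ReasonKit's resolver: `resolve` and `ENV_NAMES` are hypothetical names, the environment variable names are the ones listed under Environment Variables, and the sample values are illustrative.

```python
import os
from typing import Mapping, Optional

# Environment variable names as listed under "Environment Variables".
ENV_NAMES = {
    "provider": "RK_PROVIDER",
    "model": "RK_MODEL",
    "profile": "RK_PROFILE",
    "output_format": "RK_OUTPUT_FORMAT",
}


def resolve(key: str,
            cli: Mapping[str, Optional[str]],
            env: Mapping[str, str],
            config: Mapping[str, str],
            defaults: Mapping[str, str]) -> Optional[str]:
    """Return the first value found, from highest to lowest priority."""
    cli_value = cli.get(key)
    if cli_value is not None:          # 1. CLI flag
        return cli_value
    env_value = env.get(ENV_NAMES.get(key, ""))
    if env_value:                      # 2. environment variable
        return env_value
    if key in config:                  # 3. config file
        return config[key]
    return defaults.get(key)           # 4. built-in default


if __name__ == "__main__":
    # Illustrative values only; the real built-in defaults may differ.
    defaults = {"profile": "balanced"}
    config = {"profile": "deep", "provider": "anthropic"}
    cli = {"profile": None}  # flag not passed on the command line
    print(resolve("profile", cli, os.environ, config, defaults))
```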

Provider-Specific Configuration

Anthropic Claude

[providers.anthropic]

api_key_env = "ANTHROPIC_API_KEY"

model = "claude-sonnet-4-20250514"

max_tokens = 8192

temperature = 0.7

Available models:

- claude-opus-4-20250514 (most capable)
- claude-sonnet-4-20250514 (balanced, recommended)
- claude-3-5-haiku-20241022 (fastest)

OpenAI

[providers.openai]

api_key_env = "OPENAI_API_KEY"

model = "gpt-4o"

max_tokens = 8192

temperature = 0.7

Available models:

- gpt-4o (most capable)
- gpt-4o-mini (fast, cost-effective)
- o1 (reasoning-optimized)

Google Gemini

[providers.google]

api_key_env = "GOOGLE_API_KEY"

model = "gemini-2.0-flash"

Groq (Fast Inference)

[providers.groq]

api_key_env = "GROQ_API_KEY"

model = "llama-3.3-70b-versatile"

OpenRouter

[providers.openrouter]

api_key_env = "OPENROUTER_API_KEY"

default_model = "anthropic/claude-sonnet-4"

300+ models available. See openrouter.ai/models.

Ollama (Local)

[providers.ollama]

base_url = "http://localhost:11434"

model = "llama3"

Run ollama list to see available models.

Custom Profiles

Create custom profiles for common use cases:

[profiles.career]

# Optimized for career decisions

tools = ["gigathink", "laserlogic", "brutalhonesty"]

gigathink_perspectives = 12

laserlogic_depth = "deep"

brutalhonesty_severity = "high"

[profiles.fact_check]

# Optimized for verifying claims

tools = ["laserlogic", "proofguard"]

proofguard_sources = 5

proofguard_require_citation = true

[profiles.quick_sanity]

# Fast sanity check

tools = ["gigathink", "brutalhonesty"]

gigathink_perspectives = 5

timeout = 30

Use custom profiles:

rk think "Should I take this job?" --profile career

Output Configuration

Pretty (Default)

[output]

format = "pretty"

color = true

box_style = "rounded" # rounded, sharp, ascii

JSON

[output]

format = "json"

pretty_print = true

Markdown

[output]

format = "markdown"

include_metadata = true

Logging

[logging]

level = "info" # debug, info, warn, error

file = "~/.local/share/reasonkit/logs/rk.log"

rotate = true

max_size = "10MB"

Validating Configuration

# Check config is valid

rk config validate

# Show effective config

rk config show

# Show config file path

rk config path

Next Steps

- CLI Reference — Full command documentation

- Custom ThinkTools — Create your own tools

ThinkTools Overview

ThinkTools are specialized reasoning modules that catch specific types of oversight in AI analysis.

Why ThinkTools Matter: Yao et al. (NeurIPS 2023) report that Tree-of-Thoughts reasoning (divergent exploration with evaluation of alternatives) solved 74% of Game of 24 puzzles with GPT-4, compared to 4% for Chain-of-Thought (sequential step-by-step). ThinkTools build on this methodology.

The Five Core ThinkTools

| Tool | Purpose | Blind Spot It Catches |
|---|---|---|
| GigaThink | Explore all angles | Perspectives you forgot |
| LaserLogic | Check reasoning | Flawed logic in cliches |
| BedRock | First principles | Simple answers under complexity |
| ProofGuard | Verify claims | “Facts” that aren’t true |
| BrutalHonesty | See blind spots | Gap between plan and reality |

How They Work Together

The ThinkTools follow a designed sequence:

┌─────────────────────────────────────────────────────────────┐

│ THE 5-STEP PROCESS │

├─────────────────────────────────────────────────────────────┤

│ │

│ 1. DIVERGE → Explore all possibilities first │

│ (GigaThink) Don't narrow too early │

│ │

│ 2. CONVERGE → Check logic, find flaws │

│ (LaserLogic) Question assumptions │

│ │

│ 3. GROUND → Strip to first principles │

│ (BedRock) What actually matters? │

│ │

│ 4. VERIFY → Check facts against sources │

│ (ProofGuard) Triangulate claims │

│ │

│ 5. CUT → Attack your own work │

│ (BrutalHonesty) Find the uncomfortable truths │

│ │

└─────────────────────────────────────────────────────────────┘

The Cost of Wrong Decisions: Without structured reasoning, unexamined assumptions can lead to financial loss and missed opportunities. ThinkTools are designed to catch errors early, before they compound into costly mistakes.

Why This Sequence?

The order is deliberate:

- Divergent → Convergent: Explore widely before focusing

- Abstract → Concrete: From ideas to principles to evidence

- Constructive → Destructive: Build up, then attack

Using Individual Tools

You can invoke any tool directly:

# Just explore perspectives

rk gigathink "Should I start a business?"

# Just check logic

rk laserlogic "Renting is throwing money away"

# Just find first principles

rk bedrock "How do I get healthier?"

# Just verify a claim

rk proofguard "You should drink 8 glasses of water a day"

# Just get brutal honesty

rk brutalhonesty "I want to start a YouTube channel"

Using PowerCombo

PowerCombo runs all five tools in sequence:

# Full analysis

rk think "Should I take this job offer?" --profile balanced

The profile determines how thorough each tool’s analysis is.

Tool Configurations

Each tool can be configured:

# ~/.config/reasonkit/config.toml

[thinktools.gigathink]

min_perspectives = 10

include_contrarian = true

[thinktools.laserlogic]

fallacy_detection = true

assumption_analysis = true

[thinktools.bedrock]

decomposition_depth = 3

[thinktools.proofguard]

min_sources = 3

require_citation = true

[thinktools.brutalhonesty]

severity = "high" # low, medium, high

Next Steps

Dive deep into each tool:

- GigaThink — Multi-perspective analysis

- LaserLogic — Logic and assumption checking

- BedRock — First principles decomposition

- ProofGuard — Claim verification

- BrutalHonesty — Adversarial self-critique

- PowerCombo — All tools in sequence

GigaThink

See All The Angles

GigaThink catches the perspectives you forget when you’re excited about an opportunity or focused on one aspect of a decision.

The Blind Spot

You’re only thinking about a decision from one angle. When we’re excited or stressed, we tend to focus narrowly and miss important perspectives.

Example

Question: “Should I take this job offer?”

Without GigaThink:

“Consider salary, benefits, and culture fit.”

With GigaThink:

10 PERSPECTIVES YOU MIGHT MISS:

1. FINANCIAL: What's the TOTAL comp? 401k match? Equity?

→ Base salary is only part of the picture

2. CAREER TRAJECTORY: Where do people go after 2-3 years?

→ This job is a stepping stone. Where does it lead?

3. MANAGER QUALITY: Your manager = 80% of job satisfaction

→ Who will you report to? What's their reputation?

4. TEAM HEALTH: Why is this role open? Growth or replacement?

→ High turnover = red flag

5. COMPANY TRAJECTORY: Growing, stable, or declining?

→ Joining a sinking ship vs. rocket ship matters

6. WORK-LIFE REALITY: Glassdoor hours vs stated hours

→ "Flexible hours" sometimes means "always working"

7. LEARNING VELOCITY: Will you learn more here?

→ Stagnation kills careers

8. YOUR LEVERAGE: Do they NEED you or just WANT you?

→ Negotiate from strength

9. OPPORTUNITY COST: Is this your best option or first option?

→ Keep looking? Wait for better?

10. GUT CHECK: When you imagine accepting, relief or dread?

→ Your intuition knows something

Usage

CLI

# Direct invocation

rk gigathink "Should I start a business?"

# With options

rk gigathink "Career change?" --min-perspectives 15

Rust API

use reasonkit::thinktools::GigaThink;

// The rest runs inside an async function that returns a Result.

let gigathink = GigaThink::new()

    .min_perspectives(10)

    .include_contrarian(true);

let result = gigathink.analyze("Should I take this job offer?").await?;

for perspective in result.perspectives {

    println!("{}: {}", perspective.category, perspective.insight);

}

Python

from reasonkit import GigaThink

gt = GigaThink(min_perspectives=10)

result = gt.analyze("Should I take this job offer?")

for p in result.perspectives:

    print(f"{p.category}: {p.insight}")

Configuration

[thinktools.gigathink]

# Minimum number of perspectives to generate

min_perspectives = 10

# Include deliberately contrarian perspectives

include_contrarian = true

# Categories to always include

required_categories = [

"financial",

"career",

"personal",

"risk",

"opportunity_cost"

]

# Maximum perspectives (to avoid analysis paralysis)

max_perspectives = 20

Output Format

{

"tool": "gigathink",

"query": "Should I take this job offer?",

"perspectives": [

{

"category": "financial",

"label": "TOTAL COMPENSATION",

"insight": "What's the 401k match? Equity vesting schedule? Bonus structure?",

"questions": [

"What's the full compensation package?",

"How does equity vest?"

]

}

],

"meta": {

"perspective_count": 10,

"categories_covered": [

"financial",

"career",

"personal",

"risk",

"opportunity_cost"

],

"contrarian_included": true

}

}
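One way a consumer might use this output is to check that the categories it cares about were actually covered. The sketch below is a minimal example under that assumption: it parses a shortened copy of the JSON above, and `missing_categories` is a hypothetical helper, not a ReasonKit function.

```python
import json

# Shortened copy of the example output (one perspective, four covered categories).
RAW = """
{
  "tool": "gigathink",
  "perspectives": [
    {"category": "financial", "label": "TOTAL COMPENSATION",
     "insight": "What's the 401k match? Equity vesting schedule? Bonus structure?",
     "questions": ["What's the full compensation package?", "How does equity vest?"]}
  ],
  "meta": {"perspective_count": 10,
           "categories_covered": ["financial", "career", "personal", "risk"],
           "contrarian_included": true}
}
"""


def missing_categories(result: dict, required: list[str]) -> list[str]:
    """Return the required categories absent from meta.categories_covered."""
    covered = set(result["meta"]["categories_covered"])
    return [c for c in required if c not in covered]


result = json.loads(RAW)
for p in result["perspectives"]:
    print(f'{p["label"]}: {len(p["questions"])} follow-up questions')
print(missing_categories(result, ["financial", "career", "opportunity_cost"]))
```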

Best Practices

- Don’t stop at the obvious: The first 3-4 perspectives are usually obvious. The value is in perspectives 5-10.

- Question the frame: GigaThink might reveal that you’re asking the wrong question entirely.

- Note discomfort: If a perspective makes you uncomfortable, that’s often the most important one.

- Use for big decisions: GigaThink shines for career, financial, and relationship decisions.

Related

- LaserLogic — After exploring angles, check your reasoning

- PowerCombo — Run all five tools in sequence

LaserLogic

Spot Bad Reasoning

LaserLogic catches arguments that sound wise but hide flawed logic. It exposes hidden assumptions and logical fallacies.

The Blind Spot

Arguments that sound wise but hide flawed logic. “Common wisdom” often contains false equivalences, missing variables, and unexamined assumptions.

Example

Claim: “Renting is throwing money away”

Without LaserLogic:

“Building equity is generally better long-term.”

With LaserLogic:

HIDDEN ASSUMPTIONS EXPOSED:

1. FALSE EQUIVALENCE

Rent = 100% goes to housing (you get shelter)

Mortgage = 60-80% goes to INTEREST (also "thrown away")

→ Early mortgage payments are mostly interest, not equity

2. MISSING VARIABLES

- Down payment could be invested in S&P 500 (7-10% annual return)

- Transaction costs: 6% realtor fees when selling

- Maintenance: 1-2% of home value annually

- Property taxes: ongoing cost that renters don't pay

- Insurance: typically higher for owners

- Opportunity cost of capital tied up in house

3. ASSUMES APPRECIATION

"Houses always go up" — ask anyone who bought in 2007

→ Real estate is local and cyclical

4. IGNORES FLEXIBILITY

Rent: 30 days to leave

Own: 6+ months to sell, 6% transaction costs

→ Flexibility has economic value

5. SURVIVORSHIP BIAS

You hear from people who made money on houses

You don't hear from people who lost money

VERDICT: "Renting is throwing money away" is OVERSIMPLIFIED

Breakeven typically requires 5-7 years in same location.

The right answer depends on your specific situation.

Usage

CLI

# Direct invocation

rk laserlogic "Renting is throwing money away"

# Check specific argument

rk laserlogic "You should follow your passion" --check-fallacies

Rust API

use reasonkit::thinktools::LaserLogic;

// The rest runs inside an async function that returns a Result.

let laser = LaserLogic::new()

    .check_fallacies(true)

    .check_assumptions(true);

let result = laser.analyze("Renting is throwing money away").await?;

for flaw in result.flaws {

    println!("{}: {}", flaw.category, flaw.explanation);

}

Fallacy Detection

LaserLogic identifies common logical fallacies:

| Fallacy | Description | Example |

|---|---|---|

| False equivalence | Treating unlike things as equal | “Rent = waste, mortgage = investment” |

| Missing variables | Ignoring relevant factors | Ignoring maintenance costs |

| Survivorship bias | Only seeing successes | “My friend got rich from real estate” |

| Sunk cost fallacy | Over-valuing past investment | “I’ve spent too much to quit now” |

| Appeal to authority | Trusting credentials over logic | “Experts say…” |

| Hasty generalization | Too few examples | “Everyone I know…” |

| False dichotomy | Only two options when more exist | “Buy or rent” (ignore: rent and invest) |

Configuration

[thinktools.laserlogic]

# Check for logical fallacies

fallacy_detection = true

# Analyze hidden assumptions

assumption_analysis = true

# Show mathematical breakdowns where applicable

show_math = true

# Severity threshold (0.0 - 1.0)

min_severity = 0.3

Output Format

{

"tool": "laserlogic",

"claim": "Renting is throwing money away",

"flaws": [

{

"category": "false_equivalence",

"severity": 0.8,

"description": "Treating rent and mortgage interest as different",

"explanation": "Early mortgage payments are 60-80% interest",

"counter": "Both rent and interest provide shelter value"

}

],

"verdict": {

"classification": "oversimplified",

"confidence": 0.85,

"nuance": "True under specific conditions (5-7 year horizon, stable location)"

}

}
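The `min_severity` setting and the per-flaw `severity` field suggest a simple threshold filter. The sketch below shows one plausible reading of that relationship. The second flaw is invented for illustration, and `significant_flaws` is a hypothetical helper rather than ReasonKit's own logic.

```python
import json

RAW = """
{
  "tool": "laserlogic",
  "claim": "Renting is throwing money away",
  "flaws": [
    {"category": "false_equivalence", "severity": 0.8,
     "explanation": "Early mortgage payments are 60-80% interest"},
    {"category": "hasty_generalization", "severity": 0.2,
     "explanation": "Hypothetical low-severity flaw added for this sketch"}
  ],
  "verdict": {"classification": "oversimplified", "confidence": 0.85}
}
"""


def significant_flaws(result: dict, min_severity: float = 0.3) -> list[dict]:
    """Keep flaws at or above the threshold, most severe first."""
    kept = [f for f in result["flaws"] if f["severity"] >= min_severity]
    return sorted(kept, key=lambda f: f["severity"], reverse=True)


result = json.loads(RAW)
for flaw in significant_flaws(result):
    print(f'{flaw["severity"]:.1f} {flaw["category"]}: {flaw["explanation"]}')
```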

Best Practices

- Question cliches: If advice is short and catchy, it’s probably oversimplified.

- Follow the math: Many “wisdom” claims fall apart when you do the actual calculation.

- Ask “compared to what?”: Every choice has an alternative. What’s being compared?

- Look for hidden variables: What factors is the argument ignoring?
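To make "follow the math" concrete, the interest share quoted in the renting example can be checked with the standard fixed-rate amortization formula. This is a sketch under stated assumptions (fixed rate, monthly payments, 30-year term). The function name is illustrative and not part of ReasonKit.

```python
def first_payment_interest_share(annual_rate: float, years: int) -> float:
    """Fraction of the first monthly payment that goes to interest.

    For monthly rate r and n payments, the payment is
    M = P*r / (1 - (1+r)**-n) and the first month's interest is P*r,
    so the share is P*r / M = 1 - (1+r)**-n, independent of the principal P.
    """
    r = annual_rate / 12
    n = years * 12
    return 1 - (1 + r) ** -n


for rate in (0.03, 0.04, 0.05, 0.06):
    share = first_payment_interest_share(rate, 30)
    print(f"{rate:.0%} over 30 years: {share:.0%} of the first payment is interest")
```

Under these assumptions, rates of roughly 3-5% land close to the 60-80% range cited above, and higher rates push the share beyond it.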

Common Myths LaserLogic Exposes

- “A college degree always pays off”

- “You can’t time the market”

- “Networking is everything”

- “Hard work always pays off”

- “Early is always better than late”

Related

- GigaThink — First explore angles, then check logic

- ProofGuard — After checking logic, verify facts

BedRock

Find the Simple Answer

BedRock cuts through complexity to find first principles. It identifies what actually matters when you’re overwhelmed with options.

The Blind Spot

Overwhelmed with options, missing what actually matters. Information overload leads to analysis paralysis and missing the simple truth.

Example

Question: “How do I get healthier?”

Without BedRock:

“Consider diet, exercise, sleep, stress management, supplements, biohacking, intermittent fasting, cold exposure, meditation…”

With BedRock:

FIRST PRINCIPLES ANALYSIS:

What actually moves the needle? (Research consensus)

1. SLEEP: 7-9 hours

→ Most ignored, highest impact

→ Affects hormones, recovery, decision-making

→ Foundation for everything else

2. MOVEMENT: 150 min/week moderate OR 75 min vigorous

→ Doesn't need to be fancy

→ Walking counts

3. NUTRITION: Mostly plants, enough protein, not too much

→ The specifics matter less than the basics

→ Most diets work by reducing total calories

═══════════════════════════════════════════════════════════════

THE 80/20 ANSWER:

If you do ONLY these three things:

1. Sleep 7+ hours (non-negotiable)

2. Walk 30 min daily

3. Eat one vegetable with every meal

→ You'll be healthier than 80% of people.

Everything else (supplements, biohacking, specific diets)

is optimization on top of these basics.

═══════════════════════════════════════════════════════════════

THE UNCOMFORTABLE TRUTH:

You probably already know what to do.

The problem isn't information, it's execution.

The question isn't "how do I get healthier?"

The question is "what's stopping me from doing what I already know?"

Usage

CLI

# Direct invocation

rk bedrock "How do I get healthier?"

# With depth level

rk bedrock "How do I build a business?" --depth 3

Rust API

use reasonkit::thinktools::BedRock;

// The rest runs inside an async function that returns a Result.

let bedrock = BedRock::new()

    .decomposition_depth(3)

    .show_80_20(true);

let result = bedrock.analyze("How do I get healthier?").await?;

println!("Core principles:");

for principle in result.first_principles {

    println!("- {}: {}", principle.name, principle.description);

}

println!("\n80/20 answer:\n{}", result.pareto_answer);

First Principles Method

BedRock follows a structured decomposition:

1. DECOMPOSE

Break the question into fundamental components

"Health" → Physical, Mental, Longevity

2. EVIDENCE CHECK

What does research actually say?

Filter signal from noise

3. PARETO ANALYSIS

What 20% of actions give 80% of results?

Find the vital few

4. UNCOMFORTABLE TRUTH

What does the questioner already know but avoid?

Address the real blocker
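The Pareto step can be sketched as a greedy selection: rank actions by estimated impact and keep the highest-impact ones until they account for the target share. The scores below are made-up illustrative numbers, not research findings, and `vital_few` is a hypothetical helper rather than BedRock's implementation.

```python
def vital_few(impact_by_action: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Smallest set of highest-impact actions whose combined share reaches `threshold`."""
    total = sum(impact_by_action.values())
    if total <= 0:
        return []
    chosen: list[str] = []
    running = 0.0
    ranked = sorted(impact_by_action.items(), key=lambda kv: kv[1], reverse=True)
    for action, impact in ranked:
        if running / total >= threshold:
            break
        chosen.append(action)
        running += impact
    return chosen


# Illustrative scores only; they are not measurements.
scores = {
    "sleep": 40, "movement": 25, "nutrition": 20,
    "supplements": 5, "cold exposure": 5, "biohacking": 5,
}
print(vital_few(scores))
```

Taking the largest items first yields the fewest actions needed to reach the threshold, which is the "vital few" the method refers to.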

Configuration

[thinktools.bedrock]

# How deep to decompose (1-5)

decomposition_depth = 3

# Include 80/20 analysis

show_80_20 = true

# Include uncomfortable truths

include_uncomfortable_truth = true

# Require research backing

require_evidence = true

Output Format

{

"tool": "bedrock",

"query": "How do I get healthier?",

"first_principles": [

{

"name": "Sleep",

"priority": 1,

"evidence_level": "strong",

"description": "7-9 hours is foundation for everything else",

"why_first": "Affects hormones, recovery, decision-making"

}

],

"pareto_answer": {

"actions": ["Sleep 7+ hours", "Walk 30 min daily", "Eat vegetables"],

"expected_impact": "Better than 80% of population"

},

"uncomfortable_truth": {

"insight": "You probably already know what to do",

"real_question": "What's stopping you from doing what you already know?"

}

}

When to Use BedRock

BedRock is most valuable when:

- Information overload — Too many options, too many opinions

- Analysis paralysis — Can’t decide because of complexity

- Seeking the “best” approach — When “good enough” is actually optimal

- Recurring problems — When you keep revisiting the same issue

The 80/20 Principle

BedRock applies Pareto’s principle:

- 20% of actions give 80% of results

- The “best” solution is often worse than “good enough done consistently”

- Complexity is often a form of procrastination

Related

- LaserLogic — Check the logic of your simplified approach

- BrutalHonesty — Face the uncomfortable truth

ProofGuard

Verify Before You Believe

ProofGuard catches widely-believed “facts” that aren’t actually true. It triangulates claims against multiple sources.

The Blind Spot

Acting on “facts” you never verified. Many commonly accepted beliefs have no scientific basis or are misunderstood.

Example

Claim: “You should drink 8 glasses of water a day”

Without ProofGuard:

“Yes, staying hydrated is important. Drink plenty of water.”

With ProofGuard:

CLAIM VERIFICATION:

"You should drink 8 glasses of water a day"

═══════════════════════════════════════════════════════════════

SOURCE 1: British Medical Journal (2007)

"Medical myths" (Vreeman & Carroll)

→ "No scientific evidence for 8x8 recommendation"

→ Origin traced to 1945 Food and Nutrition Board misinterpretation

→ The original recommendation included water from food

SOURCE 2: Mayo Clinic (2022)

→ "Adequate daily fluid intake is about 15.5 cups for men, 11.5 for women"

→ This is TOTAL fluids (includes food), not just water

→ "Most healthy people can stay hydrated by drinking water when thirsty"

SOURCE 3: National Academy of Sciences (2004)

"Dietary Reference Intakes for Water"

→ "Most people meet hydration needs through normal thirst"

→ No evidence of widespread dehydration in general population

→ Urine color is a better indicator than counting glasses

═══════════════════════════════════════════════════════════════

CROSS-REFERENCE ANALYSIS:

✓ All three sources agree: 8x8 has no scientific basis

✓ All three sources agree: thirst is generally reliable

✓ All three sources agree: food provides significant water

═══════════════════════════════════════════════════════════════

VERDICT: MOSTLY MYTH

• "8 glasses" has no scientific basis

• Food provides 20-30% of water intake

• Coffee/tea count toward hydration (mild diuretic effect is offset)

• Your body has a hydration sensor: thirst

• Overhydration (hyponatremia) is actually more dangerous than mild dehydration

PRACTICAL TRUTH:

Drink when thirsty. Check urine color (pale yellow = good).

No need to count glasses.

Usage

CLI

# Direct invocation

rk proofguard "You should drink 8 glasses of water a day"

# Require specific number of sources

rk proofguard "Breakfast is the most important meal" --min-sources 3

Rust API

use reasonkit::thinktools::ProofGuard;

// The rest runs inside an async function that returns a Result.

let proofguard = ProofGuard::new()

    .min_sources(3)

    .require_citation(true);

let result = proofguard.verify("8 glasses of water a day").await?;

println!("Verdict: {:?}", result.verdict);

for source in result.sources {

    println!("- {}: {}", source.name, source.finding);

}

Source Tiers

ProofGuard prioritizes sources by reliability:

| Tier | Source Type | Weight |

|---|---|---|

| 1 | Peer-reviewed journals, meta-analyses | 1.0 |

| 2 | Government health agencies (CDC, NHS) | 0.9 |

| 3 | Major medical institutions (Mayo, Cleveland) | 0.8 |

| 4 | Established news with citations | 0.5 |

| 5 | Uncited claims, social media | 0.1 |
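One plausible way to use these weights is a weighted share of supporting sources. The sketch below assumes that reading. The weights are copied from the table, but the aggregation formula, the `supports` field, and the interpretation of `min_source_tier` as "admit tiers 1 through N" are assumptions, not documented ProofGuard behavior.

```python
TIER_WEIGHTS = {1: 1.0, 2: 0.9, 3: 0.8, 4: 0.5, 5: 0.1}  # from the table above


def weighted_support(sources: list[dict], min_source_tier: int = 3) -> float:
    """Weighted share (0.0 to 1.0) of admitted sources that support the claim.

    Sources with a tier number above `min_source_tier` (less reliable) are ignored.
    """
    admitted = [s for s in sources if s["tier"] <= min_source_tier]
    total = sum(TIER_WEIGHTS[s["tier"]] for s in admitted)
    if total == 0:
        raise ValueError("no sources meet the tier threshold")
    support = sum(TIER_WEIGHTS[s["tier"]] for s in admitted if s["supports"])
    return support / total


# Hypothetical sources for illustration.
sources = [
    {"name": "Peer-reviewed review", "tier": 1, "supports": False},
    {"name": "Health agency page", "tier": 2, "supports": False},
    {"name": "Medical institution page", "tier": 3, "supports": True},
    {"name": "Viral social post", "tier": 5, "supports": True},
]
print(f"{weighted_support(sources):.2f}")
```

With the default threshold the tier 5 post is excluded entirely, so it cannot offset the higher-tier sources that disagree with the claim.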

Verification Method

1. IDENTIFY CLAIM

Extract the specific, falsifiable claim

2. MULTI-SOURCE SEARCH

Find 3+ independent sources

Prioritize Tier 1-2 sources

3. TRIANGULATION

Do sources agree or conflict?

What's the consensus?

4. ORIGIN TRACE

Where did this claim originate?

Is it misquoted or out of context?

5. VERDICT

True / False / Partially True / Myth / Nuanced

Configuration

[thinktools.proofguard]

# Minimum sources required

min_sources = 3

# Require citations to be verified

require_citation = true

# Include origin tracing

trace_origin = true

# Source tier threshold (1-5)

min_source_tier = 3

Output Format

{

"tool": "proofguard",

"claim": "You should drink 8 glasses of water a day",

"sources": [

{

"name": "British Medical Journal",

"year": 2007,

"tier": 1,

"finding": "No scientific evidence for 8x8 recommendation",

"url": "https://..."

}

],

"triangulation": {

"agreement": "strong",

"conflicts": null

},

"origin": {

"traced_to": "1945 Food and Nutrition Board",

"misinterpretation": "Original included water from food"

},

"verdict": {

"classification": "myth",

"confidence": 0.9,

"nuance": "Thirst is generally reliable; no need to count glasses"

}

}

Common Myths ProofGuard Exposes

- “Breakfast is the most important meal of the day”

- “We only use 10% of our brains”

- “Sugar makes kids hyperactive”

- “You need 10,000 steps per day”

- “Cracking knuckles causes arthritis”

- “Reading in dim light damages your eyes”

ProofLedger Anchoring

For auditable verification, ProofGuard can anchor verified claims to a cryptographic ProofLedger.

CLI Usage

# Verify and anchor a claim

rk verify "The speed of light is 299,792,458 m/s" --anchor

# Uses SQLite with SHA-256 hashing for immutable records

# Each anchor includes: claim, sources, timestamp, content hash

Rust API

use reasonkit::verification::ProofLedger;

// The rest runs inside a function that returns a Result.

let ledger = ProofLedger::new("./proofledger.db")?;

// Anchor a verified claim

let hash = ledger.anchor(

    "Speed of light is 299,792,458 m/s",

    "https://physics.nist.gov/cgi-bin/cuu/Value?c",

    Some(r#"{"verified": true, "sources": 3}"#.to_string()),

)?;

// Later: verify the anchor still matches

let valid = ledger.verify(&hash)?;

Ledger Output

{

"id": 1,

"claim": "Speed of light is 299,792,458 m/s",

"source_url": "https://physics.nist.gov/cgi-bin/cuu/Value?c",

"content_hash": "a3b2c1...",

"anchored_at": "2025-01-15T10:30:00Z",

"metadata": { "verified": true, "sources": 3 }

}

This creates an immutable audit trail for compliance and reproducibility.
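The general idea of anchoring (hash the content, store the hash alongside it, recompute later) can be sketched with Python's standard `hashlib` and `sqlite3`. This is not ProofLedger's implementation: the table schema, the fields that are hashed, and the function names are assumptions chosen for illustration.

```python
import hashlib
import sqlite3
from datetime import datetime, timezone


def content_hash(claim: str, source_url: str) -> str:
    """SHA-256 over the claim and source URL, joined by a separator character."""
    return hashlib.sha256(f"{claim}\x1f{source_url}".encode("utf-8")).hexdigest()


def open_ledger(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a ledger database with a single anchors table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS anchors ("
        "id INTEGER PRIMARY KEY, claim TEXT, source_url TEXT, "
        "content_hash TEXT UNIQUE, anchored_at TEXT)"
    )
    return conn


def anchor(conn: sqlite3.Connection, claim: str, source_url: str) -> str:
    """Store the claim with its hash and a UTC timestamp; return the hash."""
    digest = content_hash(claim, source_url)
    conn.execute(
        "INSERT INTO anchors (claim, source_url, content_hash, anchored_at) "
        "VALUES (?, ?, ?, ?)",
        (claim, source_url, digest, datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()
    return digest


def verify(conn: sqlite3.Connection, digest: str) -> bool:
    """True if a row with this hash exists and its content still hashes to it."""
    row = conn.execute(
        "SELECT claim, source_url FROM anchors WHERE content_hash = ?", (digest,)
    ).fetchone()
    return row is not None and content_hash(*row) == digest


conn = open_ledger()
digest = anchor(
    conn,
    "Speed of light is 299,792,458 m/s",
    "https://physics.nist.gov/cgi-bin/cuu/Value?c",
)
print(verify(conn, digest))
```

In this sketch the stored hash makes later edits to a row detectable: if the claim text is changed after anchoring, the recomputed hash no longer matches and `verify` returns `False`.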

Best Practices

- Question “everyone knows” claims: The more universal a belief, the more worth verifying

- Trace origins: Many myths start from misquoted studies or marketing

- Check for conflicts of interest: Who benefits from this claim?

- Update beliefs: Science changes; what was “known” 20 years ago may be wrong

- Use anchoring for critical claims: Create permanent records for auditable decisions

Related

- LaserLogic — Check the reasoning, not just the facts

- BrutalHonesty — Face inconvenient verified truths

BrutalHonesty

See Your Blind Spots

BrutalHonesty catches the gap between your optimistic plan and reality. It tells you what you don’t want to hear—before reality does.

The Blind Spot

You love your plan and can’t see its flaws. Optimism bias makes us overestimate success and underestimate challenges.

Example

Statement: “I’m going to start a YouTube channel as a side hustle”

Without BrutalHonesty:

“Focus on a niche and be consistent!”

With BrutalHonesty:

UNCOMFORTABLE TRUTHS:

═══════════════════════════════════════════════════════════════

1. THE MATH DOESN'T WORK FOR 99%

• Median YouTuber income: $0

• Top 3% of channels get 90% of views

• Ad revenue: ~$3-5 per 1,000 views

• To make $5K/year: need 1-2 million views/year

• That's roughly 3,000-5,000 views per DAY, every day

═══════════════════════════════════════════════════════════════

2. TIME INVESTMENT IS MASSIVE

• 1 quality video = 10-40 hours

- Research: 2-5 hours

- Scripting: 2-5 hours

- Filming: 2-8 hours

- Editing: 4-20 hours

- Thumbnail/title: 1-2 hours

• That's a part-time job for months with zero return

• "Consistency" means this schedule EVERY WEEK

═══════════════════════════════════════════════════════════════

3. YOU'RE COMPETING WITH PROFESSIONALS

• MrBeast has a 100-person team

• Your "side hustle" competes with their full-time career

• Top creators work 60-80 hour weeks

• They have professional equipment, editors, writers

═══════════════════════════════════════════════════════════════

4. BURNOUT IS THE ACTUAL OUTCOME

• 95% of channels quit within first year

• Common pattern: excitement → grind → disappointment → quit

• Most channels never hit 1,000 subscribers

═══════════════════════════════════════════════════════════════

HONEST QUESTIONS:

• Can you commit 20+ hrs/week for 2 years with zero return?

• Why YouTube specifically? (Newsletter/podcast may be easier)

• Is this for money or creative expression? (Different strategies)

• What's your unique advantage? (Why would anyone watch YOU?)

• Have you made 10 videos already? (Most quit before 10)

═══════════════════════════════════════════════════════════════

IF YOU STILL WANT TO DO IT:

• Make 10 videos before "launching" (tests commitment)

• Treat it as hobby, not business, until proven

• Set a 6-month review point with specific metrics

• Have a "quit threshold" to avoid sunk cost fallacy

• Consider it successful if you enjoy the process, not the outcome
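The revenue arithmetic behind the first uncomfortable truth is easy to reproduce. The sketch below uses the ad-revenue range of 3 to 5 per 1,000 views and the 5K yearly target quoted in the example. It considers ad revenue only, and the function name is illustrative.

```python
def views_needed(target_income: float, revenue_per_1000_views: float) -> float:
    """Yearly views required to reach `target_income` from ad revenue alone."""
    return target_income / revenue_per_1000_views * 1000


for rpm in (3.0, 5.0):
    yearly = views_needed(5_000, rpm)
    print(
        f"At {rpm:.0f} per 1,000 views: "
        f"{yearly:,.0f} views/year, about {yearly / 365:,.0f} views/day"
    )
```

Running the numbers this way is the "base rates, not anecdotes" habit in miniature: the target is converted into a daily requirement that can be compared against a channel's actual traffic.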

Usage

CLI

# Direct invocation

rk brutalhonesty "I'm going to start a YouTube channel"

# Adjust severity

rk brutalhonesty "I'm going to quit my job to write a novel" --severity high

Rust API

use reasonkit::thinktools::BrutalHonesty;

// The rest runs inside an async function that returns a Result.

// `Severity` must also be in scope; its module path is not shown here.

let bh = BrutalHonesty::new()

    .severity(Severity::High)

    .include_alternatives(true);

let result = bh.analyze("I'm starting a YouTube channel").await?;

println!("Uncomfortable truths:");

for truth in result.uncomfortable_truths {

    println!("- {}", truth);

}

println!("\nHonest questions:");

for question in result.questions {

    println!("- {}", question);

}

Severity Levels

| Level | Description | Use Case |

|---|---|---|

| Low | Gentle pushback | Early exploration |

| Medium | Direct feedback | Normal decisions |

| High | No-holds-barred | High-stakes, need reality |

The BrutalHonesty Method

1. STATISTICAL REALITY

What do the actual numbers say?

Base rates, not anecdotes

2. COMPETITION ANALYSIS

Who are you actually competing against?

What's their unfair advantage?

3. TIME/EFFORT AUDIT

What's the true time investment?

Opportunity cost calculation

4. FAILURE MODE MAPPING

How do most attempts like this fail?

What's the most likely outcome?

5. HONEST QUESTIONS

Questions that force confrontation with reality

What you'd ask a friend in this situation

6. CONDITIONAL ADVICE

"If you still want to do this..."

How to approach it wisely

Configuration

[thinktools.brutalhonesty]

# Severity level: low, medium, high

severity = "high"

# Include alternative suggestions

include_alternatives = true

# Include conditional advice (if they proceed)

include_conditional = true

# Base rate lookup

use_statistics = true

Output Format

{

"tool": "brutalhonesty",

"plan": "Start a YouTube channel as a side hustle",

"uncomfortable_truths": [

{

"category": "math",

"truth": "Median YouTuber income is $0",

"evidence": "Top 3% get 90% of views"

}

],

"questions": [

"Can you commit 20+ hrs/week for 2 years with zero return?",

"Why YouTube specifically?"

],

"base_rates": {

"success_rate": 0.01,

"quit_rate_year_1": 0.95,

"median_income": 0

},

"conditional_advice": [

"Make 10 videos before launching",

"Treat as hobby until proven",

"Set a 6-month review point"

]

}

Common Plans BrutalHonesty Scrutinizes

- “I’m going to become a content creator”

- “I’m going to start a business”

- “I’m going to write a book”

- “I’m going to become a day trader”

- “I’m going to become an influencer”

- “I’m going to drop out and code”

When to Use BrutalHonesty

- Before big commitments — Quitting job, major investment

- When excited — Excitement impairs judgment

- After being told “great idea!” — Friends are often too supportive

- Recurring ideas — If you keep revisiting, get honest

The Value of Honest Feedback

BrutalHonesty isn’t about discouragement. It’s about:

- Informed decisions — Know what you’re getting into

- Better planning — Address challenges before they arise

- Appropriate expectations — Success metrics that make sense

- Early pivots — Recognize bad paths before sunk costs accumulate


PowerCombo

All Five Tools in Sequence

Research Foundation: PowerCombo implements Tree-of-Thoughts reasoning, which achieved a 74% success rate versus 4% for Chain-of-Thought on the Game of 24 benchmark (Yao et al., NeurIPS 2023). This 18.5x improvement demonstrates why structured, multi-path exploration beats linear sequential thinking.

PowerCombo runs all five ThinkTools in the optimal sequence for comprehensive analysis.

The 5-Step Process

┌─────────────────────────────────────────────────────────────┐

│ POWERCOMBO │

├─────────────────────────────────────────────────────────────┤

│ │

│ 1. GigaThink → Explore all angles │

│ Cast a wide net first │

│ │

│ 2. LaserLogic → Check the reasoning │

│ Find logical flaws │

│ │

│ 3. BedRock → Find first principles │

│ Cut to what matters │

│ │

│ 4. ProofGuard → Verify the facts │

│ Triangulate claims │

│ │

│ 5. BrutalHonesty → Face uncomfortable truths │

│ Attack your own conclusions │

│ │

└─────────────────────────────────────────────────────────────┘

Why This Order?

The sequence is deliberate:

- Divergent → Convergent

  - First explore widely (GigaThink)

  - Then narrow ruthlessly (LaserLogic, BedRock)

- Abstract → Concrete

  - Start with ideas (GigaThink)

  - Move to principles (BedRock)

  - End with evidence (ProofGuard)

- Constructive → Destructive

  - Build up possibilities first

  - Then attack your own work (BrutalHonesty)
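The ordering above can be pictured as a simple sequential pipeline in which each stage may read the output of the stages before it. The sketch below is a toy model of that idea. The stage functions are placeholders that only record what they could see, and whether PowerCombo actually passes earlier outputs forward is an assumption.

```python
from typing import Callable

Stage = Callable[[str, dict], dict]

# Standard order from the diagram above.
STANDARD_ORDER = ("gigathink", "laserlogic", "bedrock", "proofguard", "brutalhonesty")


def make_stage(tool: str) -> Stage:
    """Hypothetical stand-in for a real tool: records which outputs it could see."""
    def run(question: str, context: dict) -> dict:
        return {"tool": tool, "saw_outputs_from": list(context)}
    return run


def run_pipeline(question: str, tools: tuple[str, ...] = STANDARD_ORDER) -> dict:
    """Run stages in order; each stage can read every earlier stage's output."""
    context: dict = {}
    for name in tools:
        context[name] = make_stage(name)(question, context)
    return context


result = run_pipeline("Should I take this job offer?")
print(result["gigathink"]["saw_outputs_from"])
print(result["brutalhonesty"]["saw_outputs_from"])
```

In this model the first stage starts from the bare question, while the last stage sees everything built so far, which is what lets the destructive step attack the accumulated work.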

Usage

CLI

# Run full analysis

rk think "Should I take this job offer?" --profile balanced

# Equivalent to:

rk powercombo "Should I take this job offer?" --profile balanced

With Profiles

| Profile | Time | Depth |

|---|---|---|

| --quick | ~10 sec | Light pass on each tool |

| --balanced | ~20 sec | Standard depth |

| --deep | ~1 min | Thorough analysis |

| --paranoid | ~2-3 min | Maximum scrutiny |

Rust API

use reasonkit::thinktools::PowerCombo;

use reasonkit::profiles::Profile;

// The rest runs inside an async function that returns a Result.

let combo = PowerCombo::new()

    .profile(Profile::Balanced);

let result = combo.analyze("Should I take this job offer?").await?;

// Access each tool's output

println!("GigaThink found {} perspectives", result.gigathink.perspectives.len());

println!("LaserLogic found {} flaws", result.laserlogic.flaws.len());

println!("BedRock principles: {:?}", result.bedrock.first_principles);

println!("ProofGuard verdict: {:?}", result.proofguard.verdict);

println!("BrutalHonesty truths: {:?}", result.brutalhonesty.uncomfortable_truths);

Example Output

Question: “Should I buy a house?”

╔══════════════════════════════════════════════════════════════╗

║ POWERCOMBO ANALYSIS ║

║ Question: Should I buy a house? ║

║ Profile: balanced ║

╚══════════════════════════════════════════════════════════════╝

┌──────────────────────────────────────────────────────────────┐

│ GIGATHINK: Exploring Perspectives │

├──────────────────────────────────────────────────────────────┤

│ 1. FINANCIAL: Down payment, mortgage rates, total cost │

│ 2. LIFESTYLE: Stability vs. flexibility trade-off │

│ 3. CAREER: Does your job require mobility? │

│ 4. MARKET: Is this a good time/location to buy? │

│ 5. OPPORTUNITY: What else could you do with that money? │

│ 6. MAINTENANCE: Are you prepared for ongoing costs? │

│ 7. TIMELINE: How long will you stay? │

│ 8. EMOTIONAL: Ownership satisfaction vs. renting freedom │

└──────────────────────────────────────────────────────────────┘

┌──────────────────────────────────────────────────────────────┐

│ LASERLOGIC: Checking Reasoning │

├──────────────────────────────────────────────────────────────┤

│ FLAW: "Renting is throwing money away" │

│ → Mortgage interest is also "thrown away" │

│ → Early payments are 60-80% interest │

│ │

│ FLAW: "Houses always appreciate" │

│ → Real estate is local and cyclical │

│ → 2007-2012 counterexample │

└──────────────────────────────────────────────────────────────┘

┌──────────────────────────────────────────────────────────────┐

│ BEDROCK: First Principles │

├──────────────────────────────────────────────────────────────┤

│ CORE QUESTION: Will you be in the same place for 5-7 years?│

│ │

│ THE 80/20: │

│ • Breakeven on transaction costs: 5-7 years │

│ • If yes to stability → buying can make sense │

│ • If no/uncertain → renting is financially rational │

└──────────────────────────────────────────────────────────────┘

┌──────────────────────────────────────────────────────────────┐

│ PROOFGUARD: Fact Check │

├──────────────────────────────────────────────────────────────┤

│ VERIFIED: Transaction costs are 6-10% (realtor, closing) │

│ VERIFIED: Average homeowner stays 13 years (NAR, 2024) │

│ VERIFIED: Maintenance averages 1-2% of home value/year │

└──────────────────────────────────────────────────────────────┘

┌──────────────────────────────────────────────────────────────┐

│ BRUTALHONESTY: Uncomfortable Truths │

├──────────────────────────────────────────────────────────────┤

│ • You're asking because you want validation, not analysis │

│ • "Investment" framing obscures lifestyle preferences │

│ • Most people decide emotionally, then justify rationally │

│ │

│ HONEST QUESTION: │

│ If rent and buy were exactly equal financially, │

│ which would you choose? That's your real preference. │

└──────────────────────────────────────────────────────────────┘

═══════════════════════════════════════════════════════════════

SYNTHESIS:

The buy-vs-rent decision depends primarily on timeline.

If staying 5-7+ years in one location: buying can make sense.

If uncertain or likely to move: renting is financially rational.

Most "rent is throwing money away" arguments are oversimplified.

Configuration

[thinktools.powercombo]

# Tools to include (default: all)

tools = ["gigathink", "laserlogic", "bedrock", "proofguard", "brutalhonesty"]

# Order (default: standard)

order = "standard" # or "custom"

# Include synthesis at end

include_synthesis = true

Output Formats

# Pretty terminal output (default)

rk think "question" --format pretty

# JSON for programmatic use

rk think "question" --format json

# Markdown for documentation

rk think "question" --format markdown

Best Practices

- Use profiles appropriately: Quick for small decisions, paranoid for major ones

- Read all sections: Each tool catches different things

- Focus on BrutalHonesty: It’s often the most valuable

- Use the synthesis: The combined insight is greater than parts

Related

- Profiles Overview — Choose your depth

- Individual tools: GigaThink, LaserLogic, BedRock, ProofGuard, BrutalHonesty

Reasoning Profiles

Match your analysis depth to your decision stakes.

Profiles are pre-configured tool combinations optimized for different use cases. Think of them as “presets” that balance thoroughness against time.

The Four Profiles

┌─────────────────────────────────────────────────────────────────────────┐

│ PROFILE SPECTRUM │

├─────────────────────────────────────────────────────────────────────────┤

│ │

│ QUICK BALANCED DEEP PARANOID │

│ │ │ │ │ │

│ 10s 20s 1min 2-3min │

│ │

│ "Should I "Should I "Should I "Should I │

│ buy this?" take this move invest my │

│ job?" cities?" life savings?" │

│ │

│ Low stakes Important Major life Can't afford │

│ Reversible decisions changes to be wrong │

│ │

└─────────────────────────────────────────────────────────────────────────┘

Profile Comparison

| Profile | Tools | Time | Best For |

|---|---|---|---|

| Quick | 2 | ~10s | Low stakes, reversible |

| Balanced | 5 | ~20s | Standard decisions |

| Deep | 5+ | ~1min | Major choices |

| Paranoid | All | ~2-3min | High stakes |

Choosing a Profile

Quick Profile

Use when:

- Decision is easily reversible

- Stakes are low

- Time is limited

- You just need a sanity check

Example: “Should I buy this $50 gadget?”

Balanced Profile (Default)

Use when:

- Important but not life-changing

- You have a few minutes

- Standard analysis depth is appropriate

Example: “Should I take this job offer?”

Deep Profile

Use when:

- Major life decision

- Long-term consequences

- Multiple stakeholders affected

- You want thorough analysis

Example: “Should I move to a new city?”

Paranoid Profile

Use when:

- Cannot afford to be wrong

- Very high stakes

- Need maximum verification

- Irreversible consequences

Example: “Should I invest my life savings?”
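The selection criteria above can be sketched as a small decision function. This is one illustrative reading of the "Use when" lists, not logic that ReasonKit itself applies. The function name `choose_profile` and the stakes labels are hypothetical.

```python
STAKES_LEVELS = ("low", "moderate", "major", "critical")  # hypothetical labels


def choose_profile(reversible: bool, stakes: str) -> str:
    """Map decision traits to one of the four profile names."""
    if stakes not in STAKES_LEVELS:
        raise ValueError(f"stakes must be one of {STAKES_LEVELS}")
    if stakes == "critical":
        return "paranoid"  # cannot afford to be wrong
    if stakes == "major":
        return "deep"  # major, long-term consequences
    if stakes == "low" and reversible:
        return "quick"  # sanity check is enough
    return "balanced"  # default for everything else


if __name__ == "__main__":
    print(choose_profile(reversible=True, stakes="low"))
    print(choose_profile(reversible=False, stakes="critical"))
```

Note that a low-stakes but irreversible decision falls through to `balanced` here, on the assumption that reversibility is a precondition for the Quick profile.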

Profile Details

Tool Inclusion by Profile

| Tool | Quick | Balanced | Deep | Paranoid |
|---|---|---|---|---|
| 💡 GigaThink | ✓ | ✓ | ✓ | ✓ |
| ⚡ LaserLogic | ✓ | ✓ | ✓ | ✓ |
| 🪨 BedRock | - | ✓ | ✓ | ✓ |
| 🛡️ ProofGuard | - | ✓ | ✓ | ✓ |
| 🔥 BrutalHonesty | - | ✓ | ✓ | ✓ |

Pro Tip: ReasonKit Pro adds HighReflect (meta-cognition) and RiskRadar (threat assessment) for even deeper analysis.

Depth Settings by Profile

| Setting | Quick | Balanced | Deep | Paranoid |
|---|---|---|---|---|
| GigaThink perspectives | 5 | 10 | 15 | 20 |
| LaserLogic depth | light | standard | deep | exhaustive |
| ProofGuard sources | - | 3 | 5 | 7 |
| BrutalHonesty severity | - | medium | high | maximum |
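To see what changes when you move between profiles, the table can be expressed as a lookup structure. The sketch below transcribes the table values. The keys `gigathink_perspectives`, `laserlogic_depth`, and `proofguard_sources` follow the config examples in this guide, while `brutalhonesty_severity` and the helper `changed_settings` are hypothetical names used only for illustration.

```python
# Values transcribed from the "Depth Settings by Profile" table.
# None marks a tool the profile does not run ("-" in the table).
PROFILE_SETTINGS = {
    "quick": {
        "gigathink_perspectives": 5,
        "laserlogic_depth": "light",
        "proofguard_sources": None,
        "brutalhonesty_severity": None,  # hypothetical key name
    },
    "balanced": {
        "gigathink_perspectives": 10,
        "laserlogic_depth": "standard",
        "proofguard_sources": 3,
        "brutalhonesty_severity": "medium",
    },
    "deep": {
        "gigathink_perspectives": 15,
        "laserlogic_depth": "deep",
        "proofguard_sources": 5,
        "brutalhonesty_severity": "high",
    },
    "paranoid": {
        "gigathink_perspectives": 20,
        "laserlogic_depth": "exhaustive",
        "proofguard_sources": 7,
        "brutalhonesty_severity": "maximum",
    },
}


def changed_settings(old: str, new: str) -> dict:
    """Return {setting: (old_value, new_value)} for settings that differ."""
    before, after = PROFILE_SETTINGS[old], PROFILE_SETTINGS[new]
    return {
        key: (before[key], after[key])
        for key in before
        if before[key] != after[key]
    }


if __name__ == "__main__":
    for setting, (was, now) in changed_settings("quick", "balanced").items():
        print(f"{setting}: {was} -> {now}")
```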

Usage

# Explicit profile

rk think "question" --profile balanced

# Shorthand

rk think "question" --quick

rk think "question" --balanced

rk think "question" --deep

rk think "question" --paranoid

Custom Profiles

You can create custom profiles in your config file:

[profiles.my_profile]

tools = ["gigathink", "laserlogic", "proofguard"]

gigathink_perspectives = 8

laserlogic_depth = "deep"

proofguard_sources = 4

timeout = 120

See Custom Profiles for details.

Cost Implications

More thorough profiles use more tokens:

| Profile | ~Tokens | Claude Cost | GPT-4 Cost |
|---|---|---|---|
| Quick | 2K | ~$0.02 | ~$0.06 |
| Balanced | 5K | ~$0.05 | ~$0.15 |
| Deep | 15K | ~$0.15 | ~$0.45 |
| Paranoid | 40K | ~$0.40 | ~$1.20 |

Consider cost when choosing profiles, but don’t under-analyze high-stakes decisions to save money.
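The table figures work out to roughly $0.01 per 1K tokens for Claude and $0.03 per 1K tokens for GPT-4. These rates are back-calculated from the table, not taken from current provider pricing. Under that assumption, a rough budget estimate can be sketched as follows; `estimate_cost` is a hypothetical helper.

```python
# Approximate token usage per run, from the table above.
TOKENS_PER_RUN = {
    "quick": 2_000,
    "balanced": 5_000,
    "deep": 15_000,
    "paranoid": 40_000,
}

# Rates implied by the table (assumption: not actual provider pricing).
USD_PER_1K_TOKENS = {"claude": 0.01, "gpt-4": 0.03}


def estimate_cost(profile: str, provider: str, runs: int = 1) -> float:
    """Estimate the USD cost of `runs` analyses, rounded to cents."""
    tokens = TOKENS_PER_RUN[profile] * runs
    return round(tokens / 1000 * USD_PER_1K_TOKENS[provider], 2)


if __name__ == "__main__":
    # Twenty Balanced runs versus a single Paranoid run.
    print(estimate_cost("balanced", "claude", runs=20))
    print(estimate_cost("paranoid", "gpt-4"))
```

Actual costs depend on question length and model pricing at the time of use, so treat the result as an order-of-magnitude guide.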

Quick Profile

Fast sanity check in ~10 seconds

The Quick profile provides a rapid analysis for low-stakes, easily reversible decisions.

When to Use

- Decision is easily reversible

- Stakes are low (<$100, no major consequences)

- Time is limited

- You just need a sanity check

- Initial exploration before deeper analysis

Tools Included

| Tool | Settings |
|---|---|
| 💡 GigaThink | 5 perspectives |
| ⚡ LaserLogic | Light depth |

Usage

# Full form

rk think "question" --profile quick

# Shorthand

rk think "question" --quick

Example

Question: “Should I buy this $30 kitchen gadget?”

╔════════════════════════════════════════════════════════════╗

║ QUICK ANALYSIS ║

║ Time: 28 seconds ║

╚════════════════════════════════════════════════════════════╝

┌────────────────────────────────────────────────────────────┐

│ 💡 GIGATHINK: 5 Quick Perspectives │

├────────────────────────────────────────────────────────────┤

│ 1. UTILITY: Will you actually use it more than twice? │

│ 2. SPACE: Do you have room for another kitchen tool? │

│ 3. QUALITY: Is it well-reviewed or cheap junk? │

│ 4. ALTERNATIVE: Could existing tools do this job? │

│ 5. IMPULSE: Are you buying it or being sold it? │

└────────────────────────────────────────────────────────────┘

┌────────────────────────────────────────────────────────────┐

│ ⚡ LASERLOGIC: Quick Check │

├────────────────────────────────────────────────────────────┤

│ FLAW: "I might use it someday" │

│ → Kitchen drawer full of "someday" gadgets │

│ → If you haven't needed it before, you probably won't │

└────────────────────────────────────────────────────────────┘

VERDICT: Skip it. Low stakes but also low value.

Appropriate Decisions

- Small purchases (<$100)

- What to eat for dinner

- Which movie to watch

- Minor work decisions

- Social plans

Not Appropriate For

- Job changes

- Major purchases (>$500)

- Relationship decisions

- Health decisions

- Anything with lasting consequences

Upgrading Analysis

If a Quick analysis reveals that the decision is more complex than expected, rerun the question with a deeper profile:

# Started with quick, found it's actually complex

rk think "question" --balanced
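The upgrade path can be sketched as a simple ladder over the four profiles. This is a minimal illustration using the `--profile` flag shown earlier. The names `PROFILE_LADDER`, `next_profile`, and `upgrade_command` are hypothetical, and whether to escalate remains your judgment call.

```python
from typing import Optional

# Profiles ordered from least to most thorough.
PROFILE_LADDER = ["quick", "balanced", "deep", "paranoid"]


def next_profile(current: str) -> Optional[str]:
    """Return the next deeper profile, or None if already at the top."""
    index = PROFILE_LADDER.index(current)  # raises ValueError if unknown
    if index + 1 < len(PROFILE_LADDER):
        return PROFILE_LADDER[index + 1]
    return None


def upgrade_command(question: str, current: str) -> Optional[list]:
    """Build the argv for rerunning the question one level deeper."""
    deeper = next_profile(current)
    if deeper is None:
        return None
    return ["rk", "think", question, "--profile", deeper]


if __name__ == "__main__":
    print(upgrade_command("Should I buy this gadget?", "quick"))
```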

Configuration

[profiles.quick]

tools = ["gigathink", "laserlogic"]

gigathink_perspectives = 5

laserlogic_depth = "light"

timeout = 30

Cost

~2K tokens ≈ $0.02 (Claude) / $0.06 (GPT-4)

Related

- Profiles Overview

- Balanced Profile — For more important decisions

Balanced Profile

Standard analysis in ~20 seconds

The Balanced profile is the default—thorough enough for most decisions, fast enough to be practical.

When to Use

- Important decisions with moderate stakes

- Job offers, career moves

- Purchases $100-$10,000

- Relationship discussions

- Business decisions

- Most everyday important choices

Tools Included

| Tool | Settings |
|---|---|
| 💡 GigaThink | 10 perspectives |
| ⚡ LaserLogic | Standard depth |
| 🪨 BedRock | Full decomposition |
| 🛡️ ProofGuard | 3 sources minimum |
| 🔥 BrutalHonesty | Medium severity |

Usage

# Full form

rk think "question" --profile balanced

# Shorthand

rk think "question" --balanced

# No profile flag: Balanced is the default

rk think "question"

Example

Question: “Should I accept this job offer with 20% higher salary but longer commute?”

╔════════════════════════════════════════════════════════════╗

║ BALANCED ANALYSIS ║

║ Time: 1 minute 47 seconds ║

╚════════════════════════════════════════════════════════════╝

┌────────────────────────────────────────────────────────────┐

│ 💡 GIGATHINK: 10 Perspectives │

├────────────────────────────────────────────────────────────┤

│ 1. FINANCIAL: 20% raise minus commute costs │

│ 2. TIME: Extra commute hours per week/year │

│ 3. CAREER: Growth potential at new company │

│ 4. MANAGER: Who will you report to? │

│ 5. TEAM: Culture and people you'll work with │

│ 6. HEALTH: Commute stress and lost exercise time │

│ 7. FAMILY: Impact on family time and responsibilities │

│ 8. OPPORTUNITY: Is this the best option available? │

│ 9. REVERSIBILITY: Can you go back if it doesn't work? │